Server planning

| OS | Spec | Hostname | Public IP | VIP | Private IP | SCAN IP |
| --- | --- | --- | --- | --- | --- | --- |
| CentOS 7.9 | 1C4G | racdb01 | 192.168.40.165 | 192.168.40.16 | 192.168.183.165 | 192.168.40.200 |
| CentOS 7.9 | 1C4G | racdb02 | 192.168.40.175 | 192.168.40.17 | 192.168.183.175 | 192.168.40.200 |
| CentOS 7.9 | 1C4G | racdb03 | 192.168.40.185 | 192.168.40.18 | 192.168.183.185 | 192.168.40.200 |

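Linux server configuration

Time zone settings

Set the OS time zone according to the customer's standard; in China this is normally UTC+8, "Asia/Shanghai".

Fix the OS time zone before installing GRID. Otherwise GRID picks up the wrong OS time zone, and the database time zone, the listener time zone, and so on will all be incorrect.

--Check on every node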

[root@racdb01 grid]# timedatectl status

Local time: Tue 2024-05-21 17:38:36 CST

Universal time: Tue 2024-05-21 09:38:36 UTC

RTC time: Tue 2024-05-21 09:38:36

Time zone: Asia/Shanghai (CST, +0800)

NTP enabled: yes

NTP synchronized: no

RTC in local TZ: no

DST active: n/a

Change the OS time zone:

timedatectl set-timezone "Asia/Shanghai"
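
To confirm that every node reports the same zone before GRID is installed, the zone name can be extracted from the `timedatectl status` output and compared. The following is a minimal sketch; the helper name `tz_from_status` is hypothetical, and the sample line is copied from the output above.

```shell
# Hypothetical helper: read `timedatectl status` output on stdin and
# print only the zone name (for example Asia/Shanghai).
tz_from_status() {
  awk -F': *' '/Time zone/ { split($2, parts, " "); print parts[1] }'
}

# Sample line copied from the output above.
printf '      Time zone: Asia/Shanghai (CST, +0800)\n' | tz_from_status

# On a live node (not run here): timedatectl status | tz_from_status
```

Running the live form on each node and comparing the three results is enough to catch a node that was missed.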

Configure the hostname

--Node 1
hostnamectl set-hostname racdb01
exec bash
--Node 2
hostnamectl set-hostname racdb02
exec bash
--Node 3
hostnamectl set-hostname racdb03
exec bash

Configure the hosts file

cat >> /etc/hosts << EOF
192.168.40.165 racdb01
192.168.40.175 racdb02
192.168.40.185 racdb03
192.168.183.165 racdb01_privatevip
192.168.183.175 racdb02_privatevip
192.168.183.185 racdb03_privatevip
192.168.40.16 racdb01_vitureip
192.168.40.17 racdb02_vitureip
192.168.40.18 racdb03_vitureip
192.168.40.200 racdbscan01
## During installation, keep the cluster name to 15 characters or fewer, and do not use uppercase letters in host names.
EOF
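
The naming rule in the comment above (at most 15 characters, no uppercase letters) can be checked before the installer is started. This is a minimal sketch: `valid_cluster_name` and the candidate names are hypothetical, and for simplicity it applies both rules to a single string.

```shell
# Hypothetical helper: succeed only if the name is 1-15 characters long
# and contains no uppercase letters.
valid_cluster_name() {
  name=$1
  [ "${#name}" -ge 1 ] || return 1
  [ "${#name}" -le 15 ] || return 1
  case $name in
    *[[:upper:]]*) return 1 ;;
  esac
  return 0
}

# Illustrative candidates only; none of these names comes from the source.
for candidate in racdb-cluster RacDB-Cluster racdb-cluster-production; do
  if valid_cluster_name "$candidate"; then
    echo "$candidate: ok"
  else
    echo "$candidate: rejected"
  fi
done
```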

Configure the locale

echo "export LANG=en_US" >> ~/.bash_profile
source ~/.bash_profile

Create users, groups, and directories

--Create the groups and users
/usr/sbin/groupadd -g 50001 oinstall
/usr/sbin/groupadd -g 50002 dba
/usr/sbin/groupadd -g 50003 oper
/usr/sbin/groupadd -g 50004 asmadmin
/usr/sbin/groupadd -g 50005 asmoper
/usr/sbin/groupadd -g 50006 asmdba
/usr/sbin/useradd -u 60001 -g oinstall -G dba,asmdba,oper oracle
/usr/sbin/useradd -u 60002 -g oinstall -G asmadmin,asmdba,asmoper,oper,dba grid
echo grid | passwd --stdin grid
echo oracle | passwd --stdin oracle

--Create the directories
mkdir -p /oracle/app/grid
mkdir -p /oracle/app/11.2.0/grid
chown -R grid:oinstall /oracle
mkdir -p /oracle/app/oraInventory
chown -R grid:oinstall /oracle/app/oraInventory
mkdir -p /oracle/app/oracle
chown -R oracle:oinstall /oracle/app/oracle
chmod -R 775 /oracle
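
After the users are created, their group membership can be compared with what the `useradd` commands above were meant to produce. A minimal sketch, assuming the output of `id -Gn <user>` is passed in as a string; the helper name `has_groups` is hypothetical.

```shell
# Hypothetical helper: $1 is the space-separated output of `id -Gn <user>`;
# the remaining arguments are the groups that must be present.
has_groups() {
  actual=" $1 "
  shift
  for g in "$@"; do
    case $actual in
      *" $g "*) ;;
      *) echo "missing group: $g"; return 1 ;;
    esac
  done
  return 0
}

# Sample input mirroring the useradd command for oracle above.
has_groups "oinstall dba asmdba oper" oinstall dba asmdba oper && echo "oracle: ok"

# On a live node (not run here):
#   has_groups "$(id -Gn grid)" oinstall asmadmin asmdba asmoper oper dba
```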

Configure the yum environment and install packages

# Configure a local yum repository

mount /dev/cdrom /mnt

cd /etc/yum.repos.d

mkdir bk

mv *.repo bk/

cat > /etc/yum.repos.d/Centos7.repo << "EOF"

[local]

name=Centos7

baseurl=file:///mnt

gpgcheck=0

enabled=1

EOF

cat /etc/yum.repos.d/Centos7.repo

# Install the required packages

yum -y install autoconf

yum -y install automake

yum -y install binutils

yum -y install binutils-devel

yum -y install bison

yum -y install cpp

yum -y install dos2unix

yum -y install gcc

yum -y install gcc-c++

yum -y install lrzsz

yum -y install python-devel

yum -y install compat-db*

yum -y install compat-gcc-34

yum -y install compat-gcc-34-c++

yum -y install compat-libcap1

yum -y install compat-libstdc++-33

yum -y install compat-libstdc++-33.i686

yum -y install glibc-*

yum -y install glibc-*.i686

yum -y install libXpm-*.i686

yum -y install libXp.so.6

yum -y install libXt.so.6

yum -y install libXtst.so.6

yum -y install libXext

yum -y install libXext.i686

yum -y install libXtst

yum -y install libXtst.i686

yum -y install libX11

yum -y install libX11.i686

yum -y install libXau

yum -y install libXau.i686

yum -y install libxcb

yum -y install libxcb.i686

yum -y install libXi

yum -y install libXi.i686

yum -y install libstdc++-docs

yum -y install libgcc_s.so.1

yum -y install libstdc++.i686

yum -y install libstdc++-devel

yum -y install libstdc++-devel.i686

yum -y install libaio

yum -y install libaio.i686

yum -y install libaio-devel

yum -y install libaio-devel.i686

yum -y install libXp

yum -y install numactl

yum -y install numactl-devel

yum -y install make

yum -y install sysstat

yum -y install unixODBC

yum -y install unixODBC-devel

yum -y install elfutils-libelf-devel-0.97

yum -y install elfutils-libelf-devel

yum -y install redhat-lsb-core

yum -y install unzip

yum -y install *vnc*

# Install the Linux graphical interface

yum groupinstall -y "X Window System"

yum groupinstall -y "GNOME Desktop" "Graphical Administration Tools"

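# Check that the packages are installed

rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' binutils compat-libcap1 compat-libstdc++-33 gcc gcc-c++ glibc libaio libaio-devel libstdc++ libstdc++-devel make sysstat unixODBC

Install dependency packages

rpm -ivh compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm
rpm -ivh pdksh-5.2.14-37.el5_8.1.x86_64.rpm

If rpm reports a conflict with ksh, remove ksh first and then install the pdksh package:

rpm -evh ksh-20120801-139.el7.x86_64
rpm -ivh pdksh-5.2.14-37.el5.x86_64.rpm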

Modify system parameters

Modify the system resource limits

vi /etc/security/limits.conf

#ORACLE SETTING
grid soft nproc 16384
grid hard nproc 16384
grid soft nofile 65536
grid hard nofile 65536
grid soft stack 32768
grid hard stack 32768
oracle soft nproc 16384
oracle hard nproc 16384
oracle soft nofile 65536
oracle hard nofile 65536
oracle soft stack 32768
oracle hard stack 32768
oracle hard memlock 2000000
oracle soft memlock 2000000

ulimit -a

# nproc: limit on the number of processes a user may create
# nofile: limit on file descriptors, that is, how many files a single process may have open at once
# memlock: maximum memory the oracle user may lock, in KB. This environment has 4 GB of physical memory (1 GB for grid, 1 GB for the OS, 2 GB left for oracle); memlock must be smaller than physical memory

Modify the nproc parameter

echo "* - nproc 16384" > /etc/security/limits.d/90-nproc.conf

Enforce the resource limits for user sessions

echo "session required pam_limits.so" >> /etc/pam.d/login
cat /etc/pam.d/login

Modify kernel parameters

vi /etc/sysctl.conf

#ORACLE SETTING
fs.aio-max-nr = 1048576
fs.file-max = 6815744
kernel.sem = 250 32000 100 128
net.ipv4.ip_local_port_range = 9000 65500
net.core.rmem_default = 262144
net.core.rmem_max = 4194304
net.core.wmem_default = 262144
net.core.wmem_max = 1048576
kernel.panic_on_oops = 1
vm.nr_hugepages = 868
kernel.shmmax = 1610612736
kernel.shmall = 393216
kernel.shmmni = 4096

sysctl -p

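Parameter notes:

--kernel.panic_on_oops = 1

Controls whether the kernel panics when an oops occurs (1 = panic rather than continue).

--vm.nr_hugepages = 868

HugePages. Should always be configured when physical memory exceeds 8 GB.

Rule of thumb: sga_max_size / 2 MB + (100~500) = 1536 / 2 + 100 = 868

The HugePages pool must be larger than sga_max_size.

--kernel.shmmax = 1610612736

Maximum size of a single shared memory segment. It must be able to hold the whole SGA, so shmmax > SGA.

SGA + PGA < 80% of physical memory

SGA_max < 80% of (80% of physical memory)

PGA_max < 20% of (80% of physical memory)

--kernel.shmall = 393216

Total shared memory, in pages = kernel.shmmax / PAGESIZE

getconf PAGESIZE --returns the memory page size, 4096

--kernel.shmmni = 4096

Maximum number of shared memory segments; each instance uses one shared memory segment.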
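
The two formulas in the notes above can be checked with plain shell arithmetic. A minimal sketch, assuming a 2 MB HugePage size as in the rule of thumb; the function names are hypothetical, and the inputs are the values used in this environment.

```shell
# HugePages rule of thumb from the notes: SGA (MB) / 2 MB page size + headroom.
hugepages_for_sga() {
  sga_mb=$1
  headroom=$2
  echo $(( sga_mb / 2 + headroom ))
}

# shmall is expressed in pages: shmmax (bytes) / page size (bytes).
shmall_pages() {
  echo $(( $1 / $2 ))
}

hugepages_for_sga 1536 100        # prints 868
shmall_pages 1610612736 4096      # prints 393216
```

Both results match the values written to /etc/sysctl.conf above.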

# Physical memory (KB)
os_memory_total=$(awk '/MemTotal/{print $2}' /proc/meminfo)

# System page size, used to convert memory to pages
pagesize=$(getconf PAGE_SIZE)

min_free_kbytes=$(( os_memory_total / 250 ))
shmall=$(( (os_memory_total - 1) * 1024 / pagesize ))
shmmax=$(( os_memory_total * 1024 - 1 ))

# If shmall is below 2097152, raise it to 2097152
(( shmall < 2097152 )) && shmall=2097152

# If shmmax is below 4294967295, raise it to 4294967295
(( shmmax < 4294967295 )) && shmmax=4294967295

Disable transparent huge pages

cat /proc/meminfo
cat /sys/kernel/mm/transparent_hugepage/defrag
[always] madvise never
cat /sys/kernel/mm/transparent_hugepage/enabled
[always] madvise never

vi /etc/rc.d/rc.local

if test -f /sys/kernel/mm/transparent_hugepage/enabled; then
echo never > /sys/kernel/mm/transparent_hugepage/enabled
fi
if test -f /sys/kernel/mm/transparent_hugepage/defrag; then
echo never > /sys/kernel/mm/transparent_hugepage/defrag
fi

chmod +x /etc/rc.d/rc.local
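
The kernel marks the active transparent huge page mode with square brackets, as in the `[always] madvise never` output above. A minimal sketch for pulling out just the active mode, so that the setting can be verified after a reboot; the helper name `thp_active` is hypothetical.

```shell
# Hypothetical helper: print the bracketed (active) value from a THP sysfs line on stdin.
thp_active() {
  sed -n 's/.*\[\([a-z]*\)].*/\1/p'
}

echo "[always] madvise never" | thp_active   # prints always
echo "always madvise [never]" | thp_active   # prints never

# On a live node (not run here):
#   thp_active < /sys/kernel/mm/transparent_hugepage/enabled
```

After the rc.local change takes effect, both `enabled` and `defrag` should report `never`.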

Disable NUMA

numactl --hardware
vim /etc/default/grub
GRUB_CMDLINE_LINUX="crashkernel=auto rhgb quiet numa=off"
grub2-mkconfig -o /boot/grub2/grub.cfg

# On older systems that use legacy GRUB, append numa=off to the kernel line instead:
#vi /boot/grub/grub.conf
#kernel /boot/vmlinuz-2.6.18-128.1.16.0.1.el5 root=LABEL=DBSYS ro bootarea=dbsys rhgb quiet console=ttyS0,115200n8 console=tty1 crashkernel=128M@16M numa=off

Boot the operating system into text mode

systemctl set-default multi-user.target

Shared memory (/dev/shm)

[root@testosa ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/sda2 93G 1.9G 91G 2% /
devtmpfs 1.9G 0 1.9G 0% /dev
tmpfs 1.9G 0 1.9G 0% /dev/shm

# /dev/shm defaults to half of physical memory; make it larger
echo "tmpfs /dev/shm tmpfs defaults,size=3072m 0 0" >>/etc/fstab
mount -o remount /dev/shm

[root@testosa ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/sda2 93G 2.3G 91G 3% /
devtmpfs 1.9G 0 1.9G 0% /dev
tmpfs 3.0G 0 3.0G 0% /dev/shm

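Check or configure swap space

If swap >= 2G, skip this step.

If swap = 0, do the following.

# Create an empty 2 GB file, /swapfile (a 1M block size avoids the short write that a single 2G block produces)

dd if=/dev/zero of=/swapfile bs=1M count=2048

# Set the file permissions to 0600

chmod 600 /swapfile

# Format the file as swap

mkswap /swapfile

# Enable the swap file

swapon /swapfile

# Add the swap file to /etc/fstab so that it is activated automatically after a reboot

echo "/swapfile none swap sw 0 0" >>/etc/fstab

mount -a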

--Check memory; swap is now present

[root@racdb03 tmp]# free -g

total used free shared buff/cache available

Mem: 3 1 1 0 0 1

Swap: 3 0 3

Configure security

#1. Disable SELinux
sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
setenforce 0
#2. Stop the firewall
systemctl stop firewalld
systemctl disable firewalld

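Disable NTP

--Stop the NTP service
systemctl stop ntpd
systemctl disable ntpd
--Move the configuration file out of the way
mv /etc/ntp.conf /etc/ntp.conf_bak_20240521
--Set the time. All three hosts must show the same time; if they already do, skip this step
date -s 'Sat Aug 26 23:18:15 CST 2023'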

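Disable DNS

This is a test environment without DNS, so either move resolv.conf out of the way as shown below, or simply ignore the corresponding check failure.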

mv /etc/resolv.conf /etc/resolv.conf_bak

Configure environment variables for the grid and oracle users

grid user

su - grid

# Node 1:

cat >> ~/.bash_profile << "EOF"

PS1="[`whoami`@`hostname`:"'$PWD]$'

export PS1

umask 022

#alias sqlplus="rlwrap sqlplus"

export TMP=/tmp

export LANG=en_US

export TMPDIR=$TMP

ORACLE_SID=+ASM1; export ORACLE_SID

ORACLE_TERM=xterm; export ORACLE_TERM

ORACLE_BASE=/oracle/app/grid; export ORACLE_BASE

ORACLE_HOME=/oracle/app/11.2.0/grid; export ORACLE_HOME

NLS_DATE_FORMAT="yyyy-mm-dd HH24:MI:SS"; export NLS_DATE_FORMAT

PATH=.:$PATH:$HOME/bin:$ORACLE_HOME/bin; export PATH

THREADS_FLAG=native; export THREADS_FLAG

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

EOF

# Node 2:

cat >> ~/.bash_profile << "EOF"

PS1="[`whoami`@`hostname`:"'$PWD]$'

export PS1

umask 022

#alias sqlplus="rlwrap sqlplus"

export TMP=/tmp

export LANG=en_US

export TMPDIR=$TMP

ORACLE_SID=+ASM2; export ORACLE_SID

ORACLE_TERM=xterm; export ORACLE_TERM

ORACLE_BASE=/oracle/app/grid; export ORACLE_BASE

ORACLE_HOME=/oracle/app/11.2.0/grid; export ORACLE_HOME

NLS_DATE_FORMAT="yyyy-mm-dd HH24:MI:SS"; export NLS_DATE_FORMAT

PATH=.:$PATH:$HOME/bin:$ORACLE_HOME/bin; export PATH

THREADS_FLAG=native; export THREADS_FLAG

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

EOF

# Node 3:

cat >> ~/.bash_profile << "EOF"

PS1="[`whoami`@`hostname`:"'$PWD]$'

export PS1

umask 022

#alias sqlplus="rlwrap sqlplus"

export TMP=/tmp

export LANG=en_US

export TMPDIR=$TMP

ORACLE_SID=+ASM3; export ORACLE_SID

ORACLE_TERM=xterm; export ORACLE_TERM

ORACLE_BASE=/oracle/app/grid; export ORACLE_BASE

ORACLE_HOME=/oracle/app/11.2.0/grid; export ORACLE_HOME

NLS_DATE_FORMAT="yyyy-mm-dd HH24:MI:SS"; export NLS_DATE_FORMAT

PATH=.:$PATH:$HOME/bin:$ORACLE_HOME/bin; export PATH

THREADS_FLAG=native; export THREADS_FLAG

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

EOF

oracle user

su - oracle

# Node 1:

cat >> ~/.bash_profile << "EOF"

PS1="[`whoami`@`hostname`:"'$PWD]$'

#alias sqlplus="rlwrap sqlplus"

#alias rman="rlwrap rman"

export PS1

export TMP=/tmp

export LANG=en_US

export TMPDIR=$TMP

export ORACLE_UNQNAME=rac_db

ORACLE_BASE=/oracle/app/oracle; export ORACLE_BASE

ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1; export ORACLE_HOME

ORACLE_SID=rac_db1; export ORACLE_SID

ORACLE_TERM=xterm; export ORACLE_TERM

NLS_DATE_FORMAT="yyyy-mm-dd HH24:MI:SS"; export NLS_DATE_FORMAT

NLS_LANG=AMERICAN_AMERICA.ZHS16GBK;export NLS_LANG

PATH=.:$PATH:$HOME/bin:$ORACLE_BASE/product/11.2.0/db_1/bin:$ORACLE_HOME/bin; export PATH

THREADS_FLAG=native; export THREADS_FLAG

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

EOF

# Node 2:

cat >> ~/.bash_profile << "EOF"

PS1="[`whoami`@`hostname`:"'$PWD]$'

#alias sqlplus="rlwrap sqlplus"

#alias rman="rlwrap rman"

export PS1

export TMP=/tmp

export LANG=en_US

export TMPDIR=$TMP

export ORACLE_UNQNAME=rac_db

ORACLE_BASE=/oracle/app/oracle; export ORACLE_BASE

ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1; export ORACLE_HOME

ORACLE_SID=rac_db2; export ORACLE_SID

ORACLE_TERM=xterm; export ORACLE_TERM

NLS_DATE_FORMAT="yyyy-mm-dd HH24:MI:SS"; export NLS_DATE_FORMAT

NLS_LANG=AMERICAN_AMERICA.ZHS16GBK;export NLS_LANG

PATH=.:$PATH:$HOME/bin:$ORACLE_BASE/product/11.2.0/db_1/bin:$ORACLE_HOME/bin; export PATH

THREADS_FLAG=native; export THREADS_FLAG

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

EOF

# Node 3:

cat >> ~/.bash_profile << "EOF"

PS1="[`whoami`@`hostname`:"'$PWD]$'

#alias sqlplus="rlwrap sqlplus"

#alias rman="rlwrap rman"

export PS1

export TMP=/tmp

export LANG=en_US

export TMPDIR=$TMP

export ORACLE_UNQNAME=rac_db

ORACLE_BASE=/oracle/app/oracle; export ORACLE_BASE

ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1; export ORACLE_HOME

ORACLE_SID=rac_db3; export ORACLE_SID

ORACLE_TERM=xterm; export ORACLE_TERM

NLS_DATE_FORMAT="yyyy-mm-dd HH24:MI:SS"; export NLS_DATE_FORMAT

NLS_LANG=AMERICAN_AMERICA.ZHS16GBK;export NLS_LANG

PATH=.:$PATH:$HOME/bin:$ORACLE_BASE/product/11.2.0/db_1/bin:$ORACLE_HOME/bin; export PATH

THREADS_FLAG=native; export THREADS_FLAG

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

EOF

Configure SSH trust

# Download the script

wget https://gitcode.net/myneth/tools/-/raw/master/tool/ssh.sh

chmod +x ssh.sh

# Set up mutual trust

./ssh.sh -user grid -hosts "racdb01 racdb02 racdb03" -advanced -exverify -confirm

./ssh.sh -user oracle -hosts "racdb01 racdb02 racdb03" -advanced -exverify -confirm

chmod 600 /home/grid/.ssh/config

chmod 600 /home/oracle/.ssh/config

# Verify mutual trust

su - grid

for i in racdb{01,02,03};do

ssh $i hostname

done

su - oracle

for i in racdb{01,02,03};do

ssh $i hostname

done
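
The loops above still prompt for a password if trust is broken, which is easy to miss. A minimal sketch of a stricter check, assuming OpenSSH: `BatchMode=yes` makes `ssh` fail instead of prompting. The function name `check_ssh_trust` and the `SSH_CMD` override (useful for dry runs) are hypothetical.

```shell
# Hypothetical helper: report OK/FAIL per host and return non-zero if any host fails.
# SSH_CMD can be overridden for testing; it defaults to the real ssh client.
check_ssh_trust() {
  ssh_cmd=${SSH_CMD:-ssh}
  failed=0
  for host in "$@"; do
    if $ssh_cmd -o BatchMode=yes -o ConnectTimeout=5 "$host" hostname >/dev/null 2>&1 </dev/null; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  return "$failed"
}

# Run as grid and again as oracle, on every node (not run here):
#   check_ssh_trust racdb01 racdb02 racdb03
```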

RAC shared storage planning and configuration

Shared disk plan

grid 1G*3

data 10G*1 5G*2

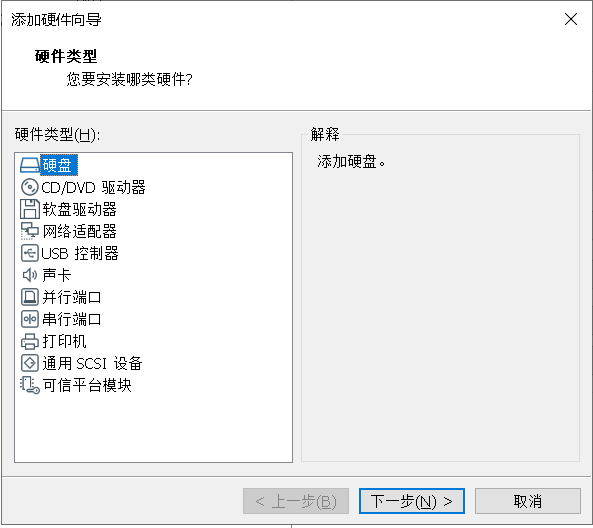

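Create shared disks in VMware Workstation

On the host operating system where VMware is installed, open cmd as administrator and change to the directory that will hold the shared disks, for example E:\Program Files (x86)\VMware\rac11g_asmlib_3节点\racdb01. The shared disks are created in the node 1 directory.

Create the shared storage

Do this for one node only; the other two nodes need no action.

--Change to the directory that will hold the shared disks

C:\Users\Administrator>e:

E:\>cd Program Files (x86)\VMware\rac11g_asmlib_3节点\racdb01

--Create the shared disks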

E:\"Program Files (x86)"\VMware\"VMware Workstation"\vmware-vdiskmanager -c -s 1GB -a lsilogic -t 4 shared-asm01.vmdk

E:\"Program Files (x86)"\VMware\"VMware Workstation"\vmware-vdiskmanager -c -s 1GB -a lsilogic -t 4 shared-asm02.vmdk

E:\"Program Files (x86)"\VMware\"VMware Workstation"\vmware-vdiskmanager -c -s 1GB -a lsilogic -t 4 shared-asm03.vmdk

E:\"Program Files (x86)"\VMware\"VMware Workstation"\vmware-vdiskmanager -c -s 5GB -a lsilogic -t 4 shared-asm04.vmdk

E:\"Program Files (x86)"\VMware\"VMware Workstation"\vmware-vdiskmanager -c -s 5GB -a lsilogic -t 4 shared-asm05.vmdk

E:\"Program Files (x86)"\VMware\"VMware Workstation"\vmware-vdiskmanager -c -s 10GB -a lsilogic -t 4 shared-asm06.vmdk

--List the shared disks that were created

E:\Program Files (x86)\VMware\rac11g_asmlib_3节点\racdb01>dir shared-asm*

驱动器 E 中的卷没有标签。

卷的序列号是 9EDB-69A6

E:\Program Files (x86)\VMware\rac11g_asmlib_3节点\racdb01 的目录

2024/05/20 20:11 1,073,741,824 shared-asm01-flat.vmdk

2024/05/20 20:11 476 shared-asm01.vmdk

2024/05/20 20:12 1,073,741,824 shared-asm02-flat.vmdk

2024/05/20 20:12 476 shared-asm02.vmdk

2024/05/20 20:13 1,073,741,824 shared-asm03-flat.vmdk

2024/05/20 20:13 476 shared-asm03.vmdk

2024/05/20 20:13 5,368,709,120 shared-asm04-flat.vmdk

2024/05/20 20:13 477 shared-asm04.vmdk

2024/05/20 20:13 5,368,709,120 shared-asm05-flat.vmdk

2024/05/20 20:13 477 shared-asm05.vmdk

2024/05/20 20:13 10,737,418,240 shared-asm06-flat.vmdk

2024/05/20 20:13 478 shared-asm06.vmdk

12 个文件 24,696,064,812 字节

0 个目录 474,241,511,424 可用字节

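Attach the shared storage (on every host)

Keep every node powered off.

Check the virtual machine configuration files

racdb01.vmx
racdb02.vmx
racdb03.vmx
# Make sure each configuration file contains the following
disk.locking = "FALSE"
disk.EnableUUID = "TRUE"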

Start each server and check the disks

--Node 1
[root@racdb01 ~]# fdisk -l|grep 'Disk /dev/s'|sort
Disk /dev/sda: 42.9 GB, 42949672960 bytes, 83886080 sectors
Disk /dev/sdb: 1073 MB, 1073741824 bytes, 2097152 sectors
Disk /dev/sdc: 1073 MB, 1073741824 bytes, 2097152 sectors
Disk /dev/sdd: 1073 MB, 1073741824 bytes, 2097152 sectors
Disk /dev/sde: 5368 MB, 5368709120 bytes, 10485760 sectors
Disk /dev/sdf: 5368 MB, 5368709120 bytes, 10485760 sectors
Disk /dev/sdg: 10.7 GB, 10737418240 bytes, 20971520 sectors

--Node 2
[root@racdb02 ~]# fdisk -l|grep 'Disk /dev/s'|sort
Disk /dev/sda: 42.9 GB, 42949672960 bytes, 83886080 sectors
Disk /dev/sdb: 1073 MB, 1073741824 bytes, 2097152 sectors
Disk /dev/sdc: 1073 MB, 1073741824 bytes, 2097152 sectors
Disk /dev/sdd: 1073 MB, 1073741824 bytes, 2097152 sectors
Disk /dev/sde: 5368 MB, 5368709120 bytes, 10485760 sectors
Disk /dev/sdf: 5368 MB, 5368709120 bytes, 10485760 sectors
Disk /dev/sdg: 10.7 GB, 10737418240 bytes, 20971520 sectors

--Node 3
[root@racdb03 ~]# fdisk -l|grep 'Disk /dev/s'|sort
Disk /dev/sda: 42.9 GB, 42949672960 bytes, 83886080 sectors
Disk /dev/sdb: 1073 MB, 1073741824 bytes, 2097152 sectors
Disk /dev/sdc: 1073 MB, 1073741824 bytes, 2097152 sectors
Disk /dev/sdd: 1073 MB, 1073741824 bytes, 2097152 sectors
Disk /dev/sde: 5368 MB, 5368709120 bytes, 10485760 sectors
Disk /dev/sdf: 5368 MB, 5368709120 bytes, 10485760 sectors
Disk /dev/sdg: 10.7 GB, 10737418240 bytes, 20971520 sectors
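
Comparing three listings by eye is error-prone. A minimal sketch that reduces the `fdisk -l` output to "device size-in-bytes" pairs, which can then be saved per node and compared with `diff`; the helper name `disk_sizes` is hypothetical, and the field positions assume the CentOS 7 output format shown above.

```shell
# Hypothetical helper: reduce `fdisk -l` output on stdin to "device bytes" pairs.
disk_sizes() {
  awk '/^Disk \/dev\/sd/ { sub(":", "", $2); print $2, $5 }' | sort
}

# Two sample lines copied from the listing above.
printf '%s\n' \
  'Disk /dev/sdg: 10.7 GB, 10737418240 bytes, 20971520 sectors' \
  'Disk /dev/sdb: 1073 MB, 1073741824 bytes, 2097152 sectors' | disk_sizes

# On each node (not run here): fdisk -l | disk_sizes > /tmp/disks.$(hostname)
```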

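ASM shared disk configuration

Ways to present the disks

- raw -- raw devices; inconvenient to use

- asmlib -- released by Oracle to address the inconvenience of raw devices; this guide uses asmlib

- udev -- dynamic device management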

Install the asmlib tools

Note: the rpm package versions differ between operating systems, so take care to download the matching ones.

Download the packages

cd /etc/yum.repos.d
wget http://www.rpmfind.net/linux/centos/7.9.2009/os/x86_64/Packages/kmod-oracleasm-2.0.8-28.el7.x86_64.rpm \
https://public-yum.oracle.com/repo/OracleLinux/OL7/latest/x86_64/getPackage/oracleasm-support-2.1.11-2.el7.x86_64.rpm \
https://download.oracle.com/otn_software/asmlib/oracleasmlib-2.0.12-1.el7.x86_64.rpm

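Supplement: downloading the ASMLib packages from official Oracle sources

Overview

ASMLib consists of the following components:

- Open-source (GPL) kernel module package: kmod-oracleasm

- Open-source (GPL) utilities package: oracleasm-support

- Closed-source (proprietary) library package: oracleasmlib

On Oracle Linux booted with the UEK kernel (confirm with uname -a), only "oracleasm-support" and "oracleasmlib" need to be installed.

UEK, the Unbreakable Enterprise Kernel, already includes the Oracle ASMLib kernel driver.

Under UEK, the command "modinfo oracleasm" confirms that the driver is included.

Oracle ASMLib Software Update and Support Policy (Doc ID 1089399.1) describes the support policy and download methods for customers who use ASMLib and Automatic Storage Management (ASM) on Oracle-supported Oracle Linux (OL) / Red Hat Enterprise Linux (RHEL) and on Red Hat-supported RHEL.

See also: Oracle Linux: How to Find ASMLib / Oracleasm RPMs (Doc ID 559055.1)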

Official download sites

Downloading oracleasmlib

- Oracle Linux 7: Oracle ASMLib Downloads for Oracle Linux 7

- RedHat 7: Oracle ASMLib Downloads for Red Hat Enterprise Linux 7 (includes oracleasm-support; judging by the link, it is the same package as for OL7)

- Oracle Linux 6: Oracle ASMLib Downloads for Oracle Linux 6

- RedHat 6: Oracle ASMLib Downloads for Red Hat Enterprise Linux Server 6 (includes oracleasm-support, but the oracleasm-support download link is broken)

Downloading oracleasm-support

- Oracle Linux 7: Unbreakable Linux Network (ULN), Oracle Linux Yum Server, or Oracle Linux 7 (x86_64) Latest. The first two are the officially documented locations but are hard to navigate; searching for oracleasm-support on the last one is the easiest.

- RedHat 7: see oracleasmlib above.

- Oracle Linux 6: Unbreakable Linux Network (ULN), Oracle Linux Yum Server, or Oracle Linux 6 (x86_64) Latest.

- RedHat 6: see oracleasmlib above (the link there is broken), or download directly: https://yum.oracle.com/repo/OracleLinux/OL6/latest/x86_64/getPackage/oracleasm-support-2.1.11-2.el6.x86_64.rpm

If the server has Internet access, yum install --downloadonly --downloaddir=/tmp oracleasm-support also works.

Downloading kmod-oracleasm

Required on RedHat, or on Oracle Linux booted with a non-UEK kernel.

- Oracle Linux 7 (non-UEK): Oracle Linux 7 (x86_64) Latest

- Oracle Linux 6 (non-UEK): Oracle Linux 6 (x86_64) Latest

If the server has Internet access, yum install --downloadonly --downloaddir=/tmp kmod-oracleasm also works.

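Downloading kmod-oracleasm on RedHat

The oracleasmlib download page for RedHat says to get the package directly from Red Hat, but I could not find a publicly usable yum repository. An ASMLib installation example on the Red Hat site also installs it directly with yum install kmod-oracleasm.

So use yum install --downloadonly --downloaddir=/tmp kmod-oracleasm to download it.

First register an account on the Red Hat site and activate a subscription. Use the developer subscription, which is free: https://developers.redhat.com/products/rhel/download

Note: access.redhat.com is the paid portal.

Then run the following on the Linux host:

- subscription-manager register: log in with your Red Hat user name and password.

- subscription-manager list --available --all: list the available subscription pools.

- subscription-manager attach --pool=<Pool ID of an available pool from the previous step>

- yum install --downloadonly --downloaddir=/tmp kmod-oracleasm

- Pick up the package from /tmp.

Reference: https://www.cnblogs.com/PiscesCanon/p/17967227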

Upload the asmlib packages

kmod-oracleasm-2.0.8-28.el7.x86_64.rpm
oracleasmlib-2.0.12-1.el7.x86_64.rpm
oracleasm-support-2.1.11-2.el7.x86_64.rpm

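Install the asmlib tools

Install and configure on all nodes

On Linux, because of device load order, a shared disk may get a different device name on each node. There are two ways to keep the names consistent across nodes (asmlib or udev); this guide uses the first:

1) Create the ASM disks with asmlib, which writes an ASM label to each partition so that every disk is identified uniquely

First install the ASM kernel packages

Download the ASM packages from the Oracle website and install them on every node.

Official ASMLib resource site: Oracle Linux: Oracle ASMLib (Oracle Technology Network)

ASM: Automatic Storage Management

Managing database storage with Oracle ASM requires three oracleasm packages:

oracleasm-support (architecture-dependent): oracleasm-support-2.1.11-2.el7.x86_64.rpm, downloaded from the Oracle site

oracleasmlib (architecture-dependent): oracleasmlib-2.0.12-1.el7.x86_64.rpm, downloaded from the Oracle site

oracleasm (kernel-dependent): kmod-oracleasm-2.0.8-28.el7.x86_64.rpm, included in the ISO

These three packages depend on the platform and kernel version. Download the versions that match the architecture and the operating system kernel; otherwise they will not work.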

# Install, in this order
rpm -ivh oracleasm-support-2.1.11-2.el7.x86_64.rpm
#systemctl enable oracleasm.service
rpm -ivh kmod-oracleasm-2.0.8-28.el7.x86_64.rpm    # Sometimes very slow, up to nearly 2 hours; a 2G /boot partition is recommended
rpm -ivh oracleasmlib-2.0.12-1.el7.x86_64.rpm

# Check
[root@racdb01 opt]# rpm -qa | grep oracleasm
kmod-oracleasm-2.0.8-28.el7.x86_64
oracleasmlib-2.0.12-1.el7.x86_64
oracleasm-support-2.1.11-2.el7.x86_64

Note: reboot all nodes after installation; otherwise configuring asmlib may fail with an error that the asmlib module cannot be loaded.

The oracleasm command now lives in /usr/sbin. The /etc/init.d path used by earlier versions is deprecated.

Configure the asmlib driver

Configure on all nodes

# Show the help text
[root@icpspnet01 soft]# /usr/sbin/oracleasm -h      --/etc/init.d/oracleasm -h is deprecated
Usage: oracleasm [--exec-path=<exec_path>] <command> [ <args> ]
       oracleasm --exec-path
       oracleasm -h
       oracleasm -V

The basic oracleasm commands are:
    configure        Configure the Oracle Linux ASMLib driver
    init             Load and initialize the ASMLib driver
    exit             Stop the ASMLib driver
    scandisks        Scan the system for Oracle ASMLib disks
    status           Display the status of the Oracle ASMLib driver
    listdisks        List known Oracle ASMLib disks
    listiids         List the iid files
    deleteiids       Delete the unused iid files
    querydisk        Determine if a disk belongs to Oracle ASMlib
    createdisk       Allocate a device for Oracle ASMLib use
    deletedisk       Return a device to the operating system
    renamedisk       Change the label of an Oracle ASMlib disk
    update-driver    Download the latest ASMLib driver

# Check the status
[root@icpspnet01]#/usr/sbin/oracleasm status      --/etc/init.d/oracleasm status is deprecated
Checking if ASM is loaded: no
Checking if /dev/oracleasm is mounted: no

# Configure the oracleasm driver
[root@testosa ~]# oracleasm configure -i
Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library
driver. The following questions will determine whether the driver is
loaded on boot and what permissions it will have. The current values
will be shown in brackets ('[]'). Hitting <ENTER> without typing an
answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: grid
Default group to own the driver interface []: asmadmin
Start Oracle ASM library driver on boot (y/n) [n]: y
Scan for Oracle ASM disks on boot (y/n) [y]: y
Writing Oracle ASM library driver configuration: done
[root@testosa ~]#

# Load the oracleasm kernel module and mount its file system (required)
[root@testosa ~]# oracleasm init
Creating /dev/oracleasm mount point: /dev/oracleasm
Loading module "oracleasm": oracleasm
Configuring "oracleasm" to use device physical block size
Mounting ASMlib driver filesystem: /dev/oracleasm
[root@testosa ~]#

# Check the status
[root@icpspnet01 soft]# oracleasm status
Checking if ASM is loaded: yes
Checking if /dev/oracleasm is mounted: yes

Create partitions on the disks

Do this on one node only

# One partition per disk is enough
fdisk /dev/sdb
fdisk /dev/sdc
fdisk /dev/sdd
fdisk /dev/sde
fdisk /dev/sdf
fdisk /dev/sdg

# List the new partitions (sdb1~sdg1 are the ones just created)
[root@racdb01 opt]# ls -lsa /dev/sd*1
0 brw-rw---- 1 root disk 8, 1 May 21 11:26 /dev/sda1
0 brw-rw---- 1 root disk 8, 17 May 21 12:44 /dev/sdb1
0 brw-rw---- 1 root disk 8, 33 May 21 12:44 /dev/sdc1
0 brw-rw---- 1 root disk 8, 49 May 21 12:44 /dev/sdd1
0 brw-rw---- 1 root disk 8, 65 May 21 12:44 /dev/sde1
0 brw-rw---- 1 root disk 8, 81 May 21 12:45 /dev/sdf1
0 brw-rw---- 1 root disk 8, 97 May 21 12:45 /dev/sdg1

# If a partition was created but does not show up, run one of the following
kpartx -a /dev/sdc
OR:
partprobe /dev/sdc
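
Interactive fdisk has to be repeated by hand for six disks. As an alternative that is not part of the original procedure, the same single-partition layout could be scripted with parted. The sketch below only prints the commands so they can be reviewed first; it assumes parted is installed, an MBR label is acceptable, and the device names match the listing above. The helper name `partition_plan` is hypothetical.

```shell
# Hypothetical helper: print (do not run) one parted command per device.
# Each command would create an msdos label and one primary partition spanning the disk.
partition_plan() {
  for dev in "$@"; do
    echo "parted -s $dev mklabel msdos mkpart primary 1MiB 100%"
  done
}

partition_plan /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf /dev/sdg
```

These commands destroy any existing partition table, so the printed list should be checked against the shared disks before anything is executed.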

Create the disks with asmlib

Do this on one node only

oracleasm createdisk ocr01 /dev/sdb1
oracleasm createdisk ocr02 /dev/sdc1
oracleasm createdisk ocr03 /dev/sdd1
oracleasm createdisk data01 /dev/sde1
oracleasm createdisk data02 /dev/sdf1
oracleasm createdisk data03 /dev/sdg1

[root@racdb03 bin]# oracleasm createdisk ocr01 /dev/sdb1
Writing disk header: done
Instantiating disk: done
[root@racdb03 bin]# oracleasm createdisk ocr02 /dev/sdc1
Writing disk header: done
Instantiating disk: done
[root@racdb03 bin]# oracleasm createdisk ocr03 /dev/sdd1
Writing disk header: done
Instantiating disk: done
[root@racdb03 bin]# oracleasm createdisk data01 /dev/sde1
Writing disk header: done
Instantiating disk: done
[root@racdb03 bin]# oracleasm createdisk data02 /dev/sdf1
Writing disk header: done
Instantiating disk: done
[root@racdb03 bin]# oracleasm createdisk data03 /dev/sdg1
Writing disk header: done
Instantiating disk: done

# List the created disks (check the ownership and permissions)
[root@racdb01 opt]# cd /dev/oracleasm/disks/
[root@racdb01 disks]# pwd
/dev/oracleasm/disks
[root@racdb01 disks]# ls -l
total 0
brw-rw---- 1 grid asmadmin 8, 65 May 21 20:51 DATA01
brw-rw---- 1 grid asmadmin 8, 81 May 21 20:51 DATA02
brw-rw---- 1 grid asmadmin 8, 97 May 21 20:51 DATA03
brw-rw---- 1 grid asmadmin 8, 17 May 21 20:51 OCR01
brw-rw---- 1 grid asmadmin 8, 33 May 21 20:51 OCR02
brw-rw---- 1 grid asmadmin 8, 49 May 21 20:51 OCR03

--Extra: delete a disk
oracleasm deletedisk DATA01

Troubleshooting

--Problem
[root@racdb01 disks]# oracleasm createdisk ocr01 /dev/sdb1
Device "/dev/sdb1" is already labeled for ASM disk "OCR01"

--Fix: wipe the disk header, then create the ASM disk again
dd if=/dev/zero of=/dev/sdb1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdc1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdd1 bs=1024 count=100
dd if=/dev/zero of=/dev/sde1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdf1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdg1 bs=1024 count=100

Edit the configuration file

Edit on all nodes

vim /etc/sysconfig/oracleasm

[root@racdb01 disks]# cat /etc/sysconfig/oracleasm|sed '/^$/d'
#
# This is a configuration file for automatic loading of the Oracle
# Automatic Storage Management library kernel driver. It is generated
# By running /etc/init.d/oracleasm configure. Please use that method
# to modify this file
#
# ORACLEASM_ENABLED: 'true' means to load the driver on boot.
ORACLEASM_ENABLED=true
# ORACLEASM_UID: Default user owning the /dev/oracleasm mount point.
ORACLEASM_UID=grid
# ORACLEASM_GID: Default group owning the /dev/oracleasm mount point.
ORACLEASM_GID=asmadmin
# ORACLEASM_SCANBOOT: 'true' means scan for ASM disks on boot.
ORACLEASM_SCANBOOT=true
# ORACLEASM_SCANORDER: Matching patterns to order disk scanning
ORACLEASM_SCANORDER=""
# ORACLEASM_SCANEXCLUDE: Matching patterns to exclude disks from scan
ORACLEASM_SCANEXCLUDE="sda"
# ORACLEASM_SCAN_DIRECTORIES: Scan disks under these directories
ORACLEASM_SCAN_DIRECTORIES=""
# ORACLEASM_USE_LOGICAL_BLOCK_SIZE: 'true' means use the logical block size
# reported by the underlying disk instead of the physical. The default
# is 'false'
ORACLEASM_USE_LOGICAL_BLOCK_SIZE=false

Scan for and list the disks

On all nodes

# Scan for disks
[root@racdb01 disks]# oracleasm scandisks
Reloading disk partitions: done
Cleaning any stale ASM disks...
Scanning system for ASM disks...

# List the disks
[root@racdb01 disks]# oracleasm listdisks
DATA01
DATA02
DATA03
OCR01
OCR02
OCR03
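
Every node must see all six labels after the scan. A minimal sketch that compares the `oracleasm listdisks` output (read from stdin) against the expected labels and names anything missing; the helper name `missing_asm_disks` is hypothetical.

```shell
# Hypothetical helper: stdin is `oracleasm listdisks` output, arguments are expected labels.
# Prints each missing label and returns non-zero if any are missing.
missing_asm_disks() {
  listed=$(cat)
  rc=0
  for disk in "$@"; do
    if ! printf '%s\n' "$listed" | grep -qx "$disk"; then
      echo "missing: $disk"
      rc=1
    fi
  done
  return "$rc"
}

# Sample input with one label absent.
printf 'DATA01\nDATA02\nOCR01\n' | missing_asm_disks DATA01 DATA02 DATA03 OCR01

# On each node (not run here):
#   oracleasm listdisks | missing_asm_disks DATA01 DATA02 DATA03 OCR01 OCR02 OCR03
```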

Install and configure the cluster software and create the ASM disk groups

Install the grid software

Unpack the installation media and install the disk check tool

--Node 1
# Downloaded software: p13390677_112040_Linux-x86-64_3of7.zip
cd /opt
chown -R grid:oinstall p13390677_112040_Linux-x86-64_3of7.zip
# Switch to the grid user
su - grid
# Unpack the software (as grid)
unzip /opt/p13390677_112040_Linux-x86-64_3of7.zip
# Switch back to root and install the disk check tool first (it is in the unpacked directory; the other nodes need it too)
[root@racdb01 ~]# rpm -ivh /home/grid/grid/rpm/cvuqdisk-1.0.9-1.rpm
scp /home/grid/grid/rpm/cvuqdisk-1.0.9-1.rpm root@192.168.40.175:/opt/
scp /home/grid/grid/rpm/cvuqdisk-1.0.9-1.rpm root@192.168.40.185:/opt/

--Install on node 2 and node 3
rpm -ivh /opt/cvuqdisk-1.0.9-1.rpm

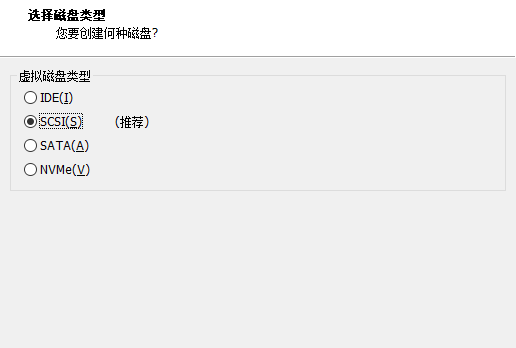

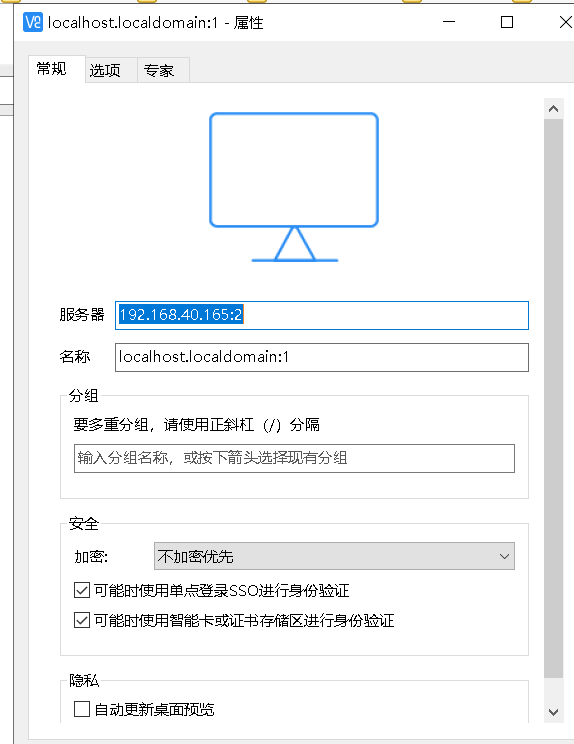

Start VNC

Start vncserver as the grid user

[root@racdb01 ~]# su - grid
Last login: Tue May 21 13:14:45 CST 2024 on pts/0
[grid@racdb01:/home/grid]$vncserver

You will require a password to access your desktops.

Password:
Verify:
Would you like to enter a view-only password (y/n)? y
Password:
Verify:
xauth: file /home/grid/.Xauthority does not exist

New 'racdb01:1 (grid)' desktop is racdb01:1

Creating default startup script /home/grid/.vnc/xstartup
Creating default config /home/grid/.vnc/config
Starting applications specified in /home/grid/.vnc/xstartup
Log file is /home/grid/.vnc/racdb01:1.log

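Run the graphical installer through vncserver

Connect remotely with a VNC client and run the graphical installer inside the vncserver session.

Installation process

Log locations:

/tmp/OraInstall2024-05-21_04-46-49-PM

/oracle/app/oraInventory/logs/installActions2024-05-22_10-04-06AM.log

--Use the -jreLoc option; otherwise the dialog boxes in the installer cannot be enlarged
su - grid
cd grid
./runInstaller -jreLoc /etc/alternatives/jre_1.8.0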

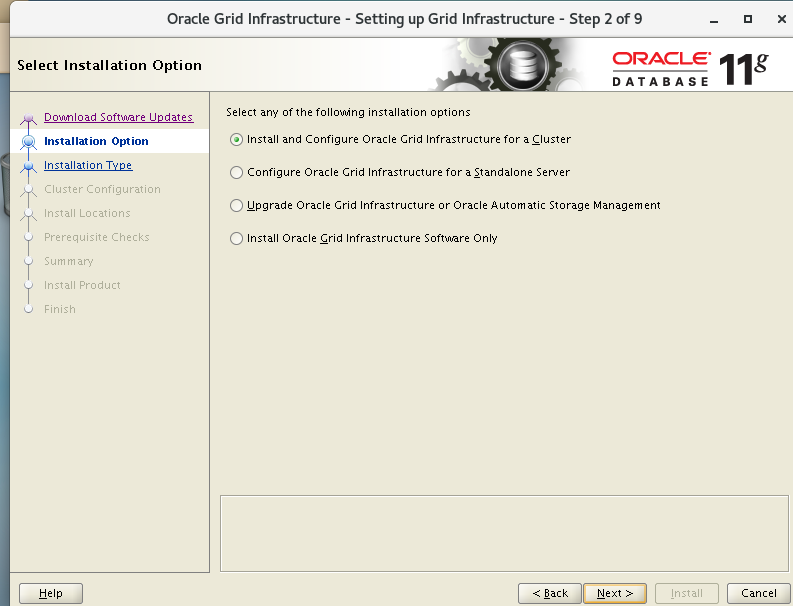

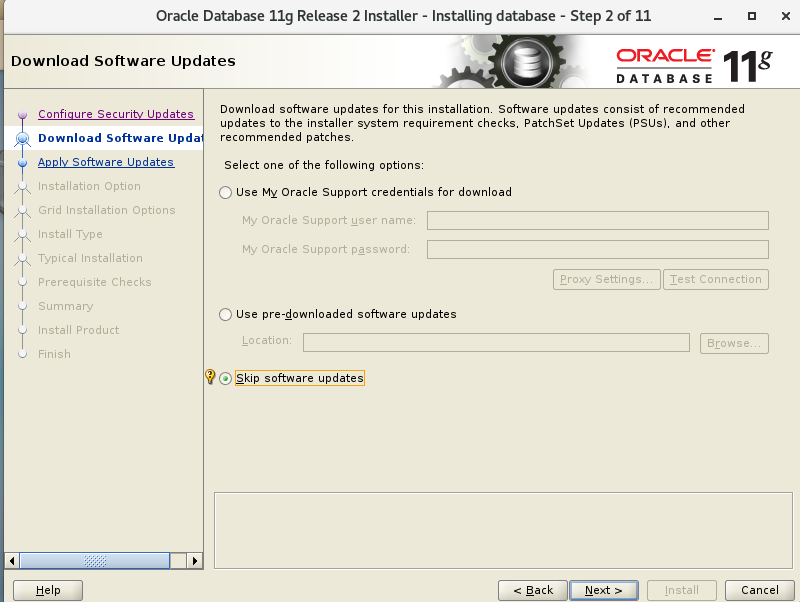

Skip software updates

Install and configure

Advanced installation

Select languages

Set the cluster name

The SCAN Name must match the host name that follows the SCAN IP address in /etc/hosts.

[grid@racdb01:/home/grid]$cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.40.165 racdb01
192.168.40.175 racdb02
192.168.40.185 racdb03
192.168.183.165 racdb01_privatevip
192.168.183.175 racdb02_privatevip
192.168.183.185 racdb03_privatevip
192.168.40.16 racdb01_vitureip
192.168.40.17 racdb02_vitureip
192.168.40.18 racdb03_vitureip
192.168.40.200 racdbscan01
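
To avoid a typo in the installer, the SCAN name can be read straight from the hosts file by its IP address. A minimal sketch; the helper name `name_for_ip` is hypothetical, and the sample entries are copied from the listing above into a temporary file so the example does not depend on the real /etc/hosts.

```shell
# Hypothetical helper: print the first name listed for an IP in a hosts-format file.
# $1 = file, $2 = IP address
name_for_ip() {
  awk -v ip="$2" '$1 == ip { print $2; exit }' "$1"
}

# Demonstration on a temporary copy of two entries from the listing above.
sample=$(mktemp)
printf '192.168.40.185 racdb03\n192.168.40.200 racdbscan01\n' > "$sample"
name_for_ip "$sample" 192.168.40.200   # prints racdbscan01
rm -f "$sample"

# On a node (not run here): name_for_ip /etc/hosts 192.168.40.200
```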

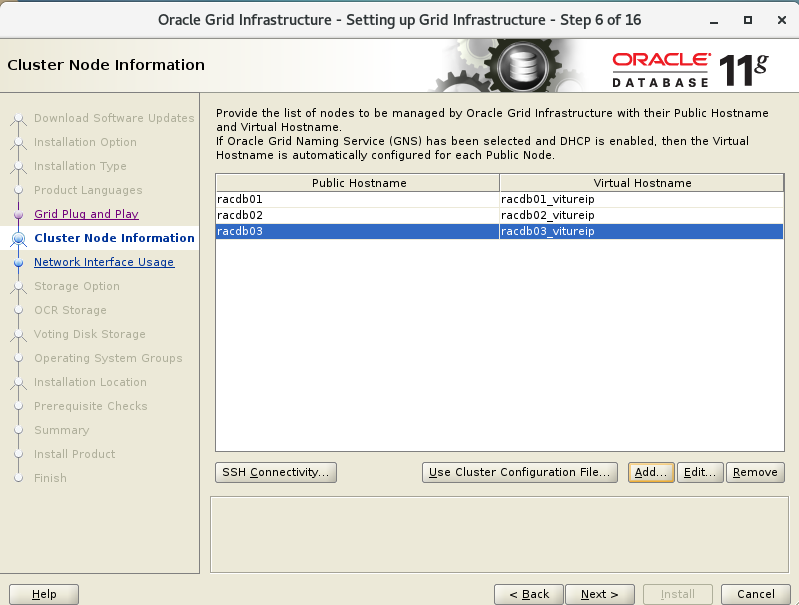

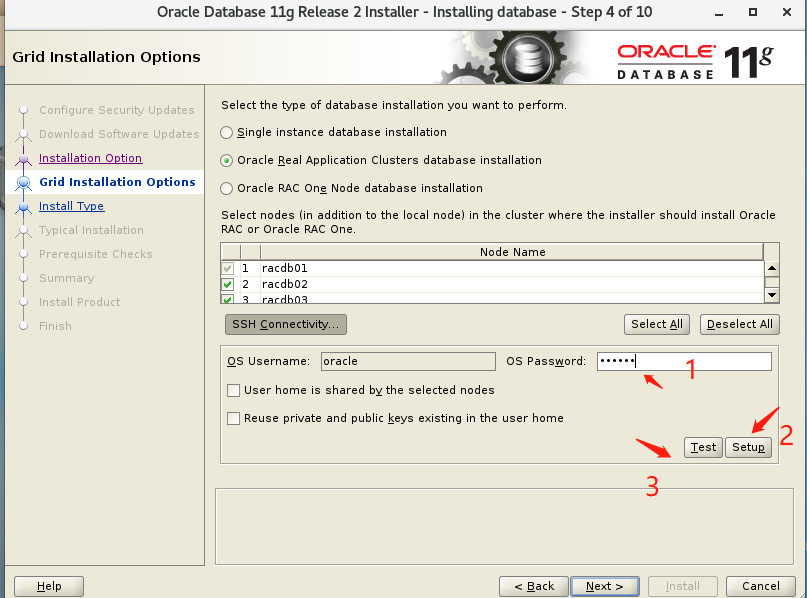

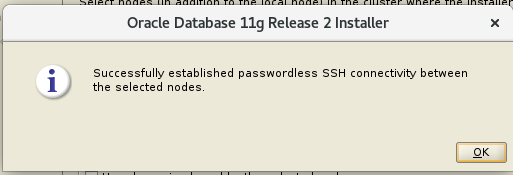

Add the cluster node list and check SSH trust (check every node)

Check SSH trust

Specify the network interfaces

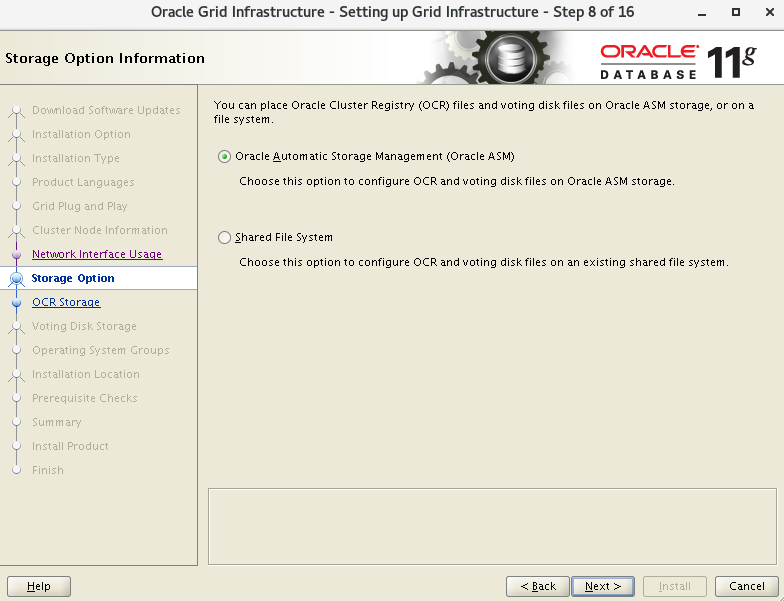

Storage options

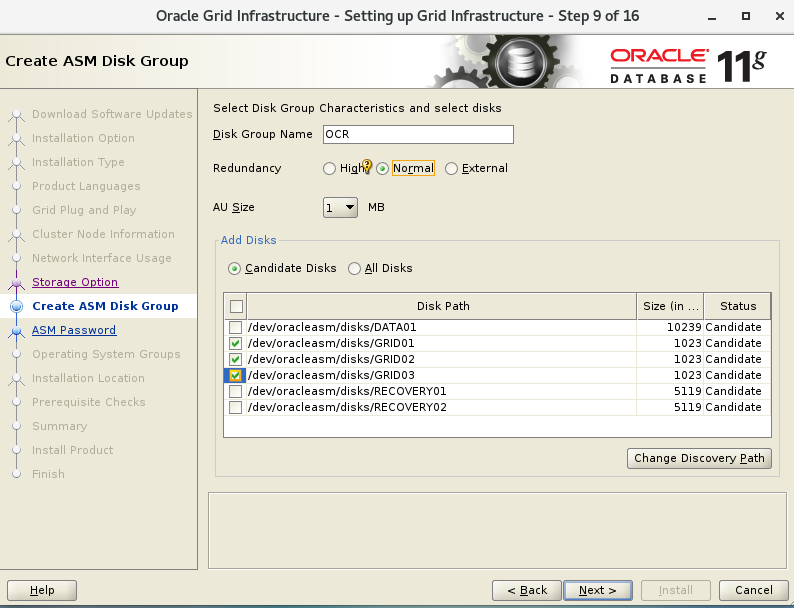

Create the disk group

OCR disk group

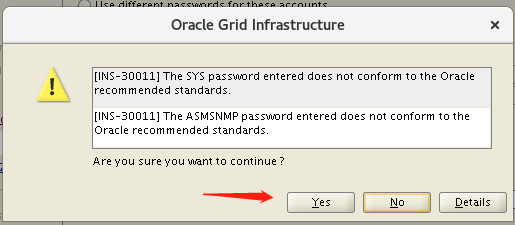

Specify the ASM instance password

The password is set to oracle

Failure isolation support

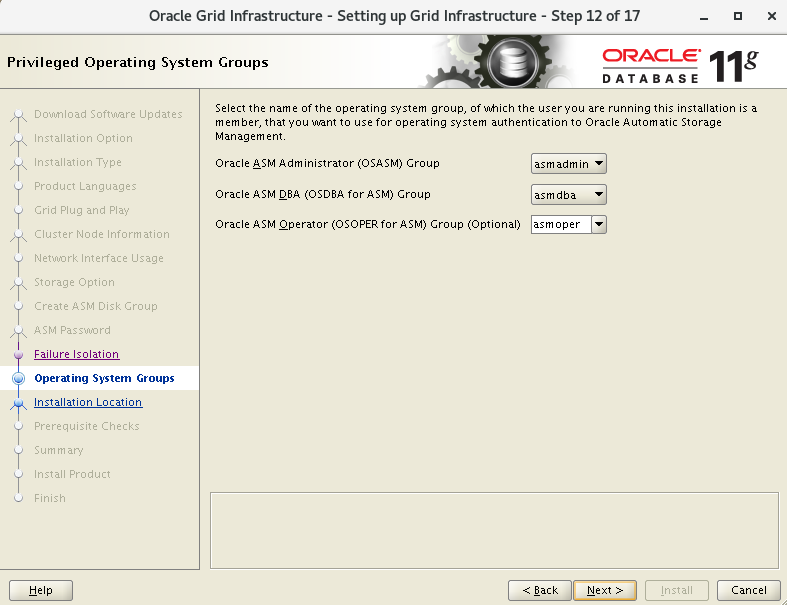

Operating system groups

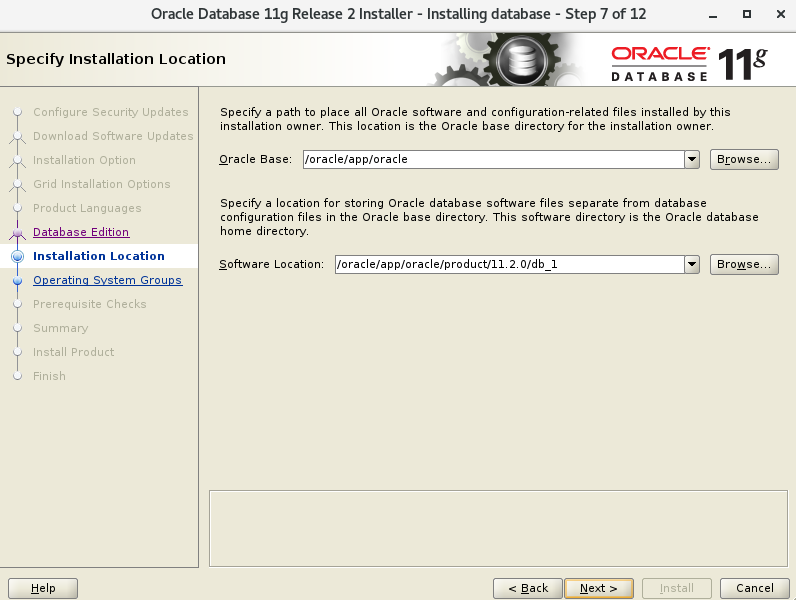

Specify the installation location

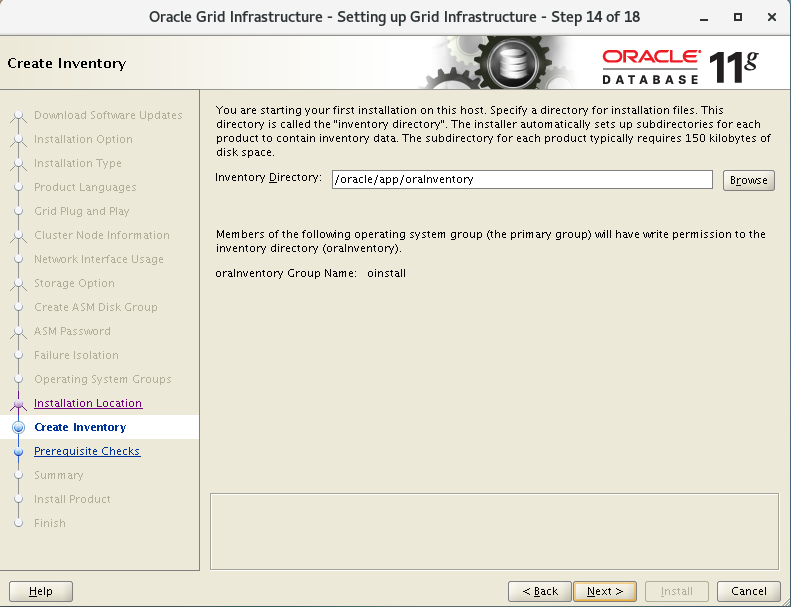

Create the inventory directory

Prerequisite checks

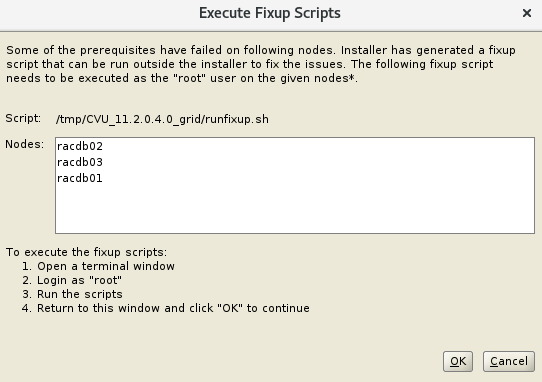

Try the automatic fix

Follow the prompt and run the following

--Node 1
[root@racdb01 tmp]# /tmp/CVU_11.2.0.4.0_grid/runfixup.sh
Response file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.response
Enable file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.enable
Log file location: /tmp/CVU_11.2.0.4.0_grid/orarun.log
Setting Kernel Parameters...
kernel.shmmax = 1610612736
kernel.shmmax = 2061377536
kernel.shmall = 393216
kernel.shmall = 2097152

--Node 2
[root@racdb02 disks]# /tmp/CVU_11.2.0.4.0_grid/runfixup.sh
Response file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.response
Enable file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.enable
Log file location: /tmp/CVU_11.2.0.4.0_grid/orarun.log
Setting Kernel Parameters...
kernel.shmall = 393216
kernel.shmall = 2097152

--Node 3
[root@racdb03 opt]# /tmp/CVU_11.2.0.4.0_grid/runfixup.sh
Response file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.response
Enable file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.enable
Log file location: /tmp/CVU_11.2.0.4.0_grid/orarun.log
Setting Kernel Parameters...
kernel.shmall = 393216
kernel.shmall = 2097152

When the scripts finish, go back to the installer, click OK, and let it re-check the prerequisites

Install the pdksh and compat-libstdc++-33 packages (on every node)

rpm -ivh compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm
rpm -ivh pdksh-5.2.14-37.el5_8.1.x86_64.rpm

If rpm reports a conflict with ksh, remove ksh first and then install the pdksh package:

rpm -evh ksh-20120801-139.el7.x86_64
rpm -ivh pdksh-5.2.14-37.el5.x86_64.rpm

Check again after installing

--Node 1
[root@racdb01 tmp]# /tmp/CVU_11.2.0.4.0_grid/runfixup.sh
Response file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.response
Enable file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.enable
Log file location: /tmp/CVU_11.2.0.4.0_grid/orarun.log
Installing Package /tmp/CVU_11.2.0.4.0_grid//cvuqdisk-1.0.9-1.rpm
Preparing... ################################# [100%]
Updating / installing...
1:cvuqdisk-1.0.9-1 ################################# [100%]

--Node 2
[root@racdb02 ~]# /tmp/CVU_11.2.0.4.0_grid/runfixup.sh
Response file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.response
Enable file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.enable
Log file location: /tmp/CVU_11.2.0.4.0_grid/orarun.log
Installing Package /tmp/CVU_11.2.0.4.0_grid//cvuqdisk-1.0.9-1.rpm
Preparing... ################################# [100%]
Updating / installing...
1:cvuqdisk-1.0.9-1 ################################# [100%]

--Node 3
[root@racdb03 disks]# /tmp/CVU_11.2.0.4.0_grid/runfixup.sh
Response file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.response
Enable file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.enable
Log file location: /tmp/CVU_11.2.0.4.0_grid/orarun.log
Installing Package /tmp/CVU_11.2.0.4.0_grid//cvuqdisk-1.0.9-1.rpm
Preparing... ################################# [100%]
Updating / installing...
1:cvuqdisk-1.0.9-1 ################################# [100%]

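Select Ignore All, then click Next.

Run the root scripts

Follow the installer dialog: run each listed script on every node it names. Two scripts are typical, but the exact list depends on the environment.

Installer log for this step: /oracle/app/oraInventory/logs/installActions2024-05-22_10-04-06AM.log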

[root@racdb01 opt]# /oracle/app/oraInventory/orainstRoot.sh
Changing permissions of /oracle/app/oraInventory.
Adding read,write permissions for group.
Removing read,write,execute permissions for world.

Changing groupname of /oracle/app/oraInventory to oinstall.
The execution of the script is complete.

[root@racdb01 grid]# /oracle/app/11.2.0/grid/root.sh
[root@racdb01 etc]# /oracle/app/11.2.0/grid/root.sh
Performing root user operation for Oracle 11g

The following environment variables are set as:
    ORACLE_OWNER= grid
    ORACLE_HOME=  /oracle/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:
   Copying dbhome to /usr/local/bin ...
   Copying oraenv to /usr/local/bin ...
   Copying coraenv to /usr/local/bin ...

Entries will be added to the /etc/oratab file as needed by
Database Configuration Assistant when a database is created
Finished running generic part of root script.
Now product-specific root actions will be performed.
Using configuration parameter file: /oracle/app/11.2.0/grid/crs/install/crsconfig_params
Creating trace directory
User ignored Prerequisites during installation
Installing Trace File Analyzer
OLR initialization - successful
  root wallet
  root wallet cert
  root cert export
  peer wallet
  profile reader wallet
  pa wallet
  peer wallet keys
  pa wallet keys
  peer cert request
  pa cert request
  peer cert
  pa cert
  peer root cert TP
  profile reader root cert TP
  pa root cert TP
  peer pa cert TP
  pa peer cert TP
  profile reader pa cert TP
  profile reader peer cert TP
  peer user cert
  pa user cert
Adding Clusterware entries to inittab
CRS-2672: Attempting to start 'ora.mdnsd' on 'racdb01'
CRS-2676: Start of 'ora.mdnsd' on 'racdb01' succeeded
CRS-2672: Attempting to start 'ora.gpnpd' on 'racdb01'
CRS-2676: Start of 'ora.gpnpd' on 'racdb01' succeeded
CRS-2672: Attempting to start 'ora.cssdmonitor' on 'racdb01'
CRS-2672: Attempting to start 'ora.gipcd' on 'racdb01'
CRS-2676: Start of 'ora.cssdmonitor' on 'racdb01' succeeded
CRS-2676: Start of 'ora.gipcd' on 'racdb01' succeeded
CRS-2672: Attempting to start 'ora.cssd' on 'racdb01'
CRS-2672: Attempting to start 'ora.diskmon' on 'racdb01'
CRS-2676: Start of 'ora.diskmon' on 'racdb01' succeeded
CRS-2676: Start of 'ora.cssd' on 'racdb01' succeeded

ASM created and started successfully.

Disk Group OCR created successfully.

clscfg: -install mode specified
Successfully accumulated necessary OCR keys.
Creating OCR keys for user 'root', privgrp 'root'..
Operation successful.
CRS-4256: Updating the profile
Successful addition of voting disk a57467f8efbc4fc1bfd2743279d18f2a.
Successful addition of voting disk 61006c2f61724f9dbfa8be53ba18c44a.
Successful addition of voting disk ad17f6f72cb14fc5bf04ce3e5611e30a.
Successfully replaced voting disk group with +OCR.
CRS-4256: Updating the profile
CRS-4266: Voting file(s) successfully replaced
##  STATE    File Universal Id                File Name Disk group
--  -----    -----------------                --------- ---------
 1. ONLINE   a57467f8efbc4fc1bfd2743279d18f2a (/dev/oracleasm/disks/OCR01) [OCR]
 2. ONLINE   61006c2f61724f9dbfa8be53ba18c44a (/dev/oracleasm/disks/OCR02) [OCR]
 3. ONLINE   ad17f6f72cb14fc5bf04ce3e5611e30a (/dev/oracleasm/disks/OCR03) [OCR]
Located 3 voting disk(s).
CRS-2672: Attempting to start 'ora.asm' on 'racdb01'
CRS-2676: Start of 'ora.asm' on 'racdb01' succeeded
CRS-2672: Attempting to start 'ora.OCR.dg' on 'racdb01'
CRS-2676: Start of 'ora.OCR.dg' on 'racdb01' succeeded
Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Troubleshooting

[client(34579)]CRS-2101:The OLR was formatted using version 3.

-- Problem
[root@racdb01 grid]# /oracle/app/11.2.0/grid/root.sh
Adding Clusterware entries to inittab
ohasd failed to start
Failed to start the Clusterware. Last 20 lines of the alert log follow:
2024-05-21 17:23:43.720:
[client(34579)]CRS-2101:The OLR was formatted using version 3.

-- Cause
CentOS 7 uses systemd rather than SysV init to spawn and respawn processes, whereas root.sh starts ohasd through the traditional inittab mechanism.

-- Solution
On CentOS 7, ohasd has to be set up as a systemd service before root.sh is run.

# Create the service file as root
cat > /usr/lib/systemd/system/ohas.service << "EOF"
[Unit]
Description=Oracle High Availability Services
After=syslog.target

[Service]
ExecStart=/etc/init.d/init.ohasd run >/dev/null 2>&1 Type=simple
Restart=always

[Install]
WantedBy=multi-user.target
EOF

# A unit file only needs to be readable; 644 avoids systemd's warning about world-writable or executable unit files
chmod 644 /usr/lib/systemd/system/ohas.service
systemctl daemon-reload
systemctl enable ohas.service
systemctl start ohas.service
systemctl status ohas.service

# Check the ohas service status
[root@testosa ~]# systemctl status ohas.service
* ohas.service - Oracle High Availability Services
   Loaded: loaded (/usr/lib/systemd/system/ohas.service; enabled; vendor preset: disabled)
   Active: active (running) since Sun 2023-08-27 18:48:57 CST; 6s ago
 Main PID: 91992 (init.ohasd)
    Tasks: 1
   CGroup: /system.slice/ohas.service
           `-91992 /bin/sh /etc/init.d/init.ohasd run >/dev/null 2>&1 Type=simple

Aug 27 18:48:57 testosa systemd[1]: Started Oracle High Availability Services.
[root@testosa ~]#

# systemd does not interpret shell redirection, so ">/dev/null 2>&1 Type=simple" is passed to init.ohasd as literal arguments, as the CGroup line shows.

# Re-run the root script
Run the scripts on the second and third nodes in the same way. The first run of root.sh fails on each node and aborts; start the ohas service and run root.sh again.
When the scripts have finished, click OK.

ORA-29785: GPnP attribute GET failed with error [CLSGPNP_SIG_WALLETDIF]

-- Problem
root.sh fails with the following error:
CRS-2676: Start of 'ora.cssd' on 'racdb01' succeeded
Creation of ASM spfile in disk group failed. Following error occured: ORA-29785: GPnP attribute GET failed with error [CLSGPNP_SIG_WALLETDIF]

Configuration of ASM ... failed
see asmca logs at /oracle/app/grid/cfgtoollogs/asmca for details
Did not succssfully configure and start ASM at /oracle/app/11.2.0/grid/crs/install/crsconfig_lib.pm line 6912.
/oracle/app/11.2.0/grid/perl/bin/perl -I/oracle/app/11.2.0/grid/perl/lib -I/oracle/app/11.2.0/grid/crs/install /oracle/app/11.2.0/grid/crs/install/rootcrs.pl execution failed

-- Check the log
cd /oracle/app/grid/cfgtoollogs/asmca
tail -300f asmca-240521PM053344.log
[main] [ 2024-05-21 17:33:56.270 CST ] [UsmcaLogger.logInfo:143] Creation of ASM spfile in disk group failed. Following error occured: ORA-29785: GPnP attribute GET failed with error [CLSGPNP_SIG_WALLETDIF]
[main] [ 2024-05-21 17:33:56.270 CST ] [OsUtilsBase.deleteFile:1863] OsUtilsBase.deleteFile: /oracle/app/11.2.0/grid/dbs/init+ASM1.ora
[main] [ 2024-05-21 17:33:56.270 CST ] [UsmcaLogger.logInfo:143] deleting temp ora file. for sid: /oracle/app/11.2.0/grid/dbs/init+ASM1.ora
[main] [ 2024-05-21 17:33:56.270 CST ] [UsmcaLogger.logExit:124] Exiting oracle.sysman.assistants.usmca.backend.USMInstance Method : configureLocalASM
[main] [ 2024-05-21 17:33:56.271 CST ] [UsmcaLogger.logExit:124] Exiting oracle.sysman.assistants.usmca.model.UsmcaModel Method : performConfigureLocalASM
[main] [ 2024-05-21 17:33:56.271 CST ] [UsmcaLogger.logExit:124] Exiting oracle.sysman.assistants.usmca.model.UsmcaModel Method : performOperation

-- Cause
Processes were still running when Grid was deinstalled, and the disk headers were not wiped correctly.
A disk header can be wiped in either of two ways:
dd if=/dev/zero of=/dev/sdb1 bs=1024 count=100
or
dd if=/dev/zero of=/dev/mapper/ocr01 bs=1024 count=100
The first form, against the partition device, is the recommended one.

-- Solution
Deinstall Grid; the detailed steps are in the Grid deinstallation section of the supplementary notes.

Once the scripts succeed, click OK.

This error appears because no DNS is used for name resolution. It can be ignored: click OK, then Next.

Check the Grid service status

[root@racdb01 etc]# /oracle/app/11.2.0/grid/bin/crsctl status resource -t
--------------------------------------------------------------------------------
NAME           TARGET  STATE        SERVER                   STATE_DETAILS
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.LISTENER.lsnr
               ONLINE  ONLINE       racdb01
               ONLINE  ONLINE       racdb02
               ONLINE  ONLINE       racdb03
ora.OCR.dg
               ONLINE  ONLINE       racdb01
               ONLINE  ONLINE       racdb02
               ONLINE  ONLINE       racdb03
ora.asm
               ONLINE  ONLINE       racdb01                  Started
               ONLINE  ONLINE       racdb02                  Started
               ONLINE  ONLINE       racdb03                  Started
ora.gsd
               OFFLINE OFFLINE      racdb01
               OFFLINE OFFLINE      racdb02
               OFFLINE OFFLINE      racdb03
ora.net1.network
               ONLINE  ONLINE       racdb01
               ONLINE  ONLINE       racdb02
               ONLINE  ONLINE       racdb03
ora.ons
               ONLINE  ONLINE       racdb01
               ONLINE  ONLINE       racdb02
               ONLINE  ONLINE       racdb03
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.LISTENER_SCAN1.lsnr
      1        ONLINE  ONLINE       racdb01
ora.cvu
      1        ONLINE  ONLINE       racdb01
ora.oc4j
      1        ONLINE  ONLINE       racdb01
ora.racdb01.vip
      1        ONLINE  ONLINE       racdb01
ora.racdb02.vip
      1        ONLINE  ONLINE       racdb02
ora.racdb03.vip
      1        ONLINE  ONLINE       racdb03
ora.scan1.vip
      1        ONLINE  ONLINE       racdb01

Supplementary notes

Device Checks for ASM

Device Checks for ASM - This is a pre-check to verify if the specified devices meet the requirements for configuration through the Oracle Universal Storage Manager Configuration Assistant.

Error:
- testosc:PRVF-7533 : Proper version of package "cvuqdisk" is not found on node "testosc" [Required = "1.0.9-1" ; Found = "1.0.10-1"].
  - Cause: Cause Of Problem Not Available
  - Action: User Action Not Available
- testosc:PRVF-7533 : Proper version of package "cvuqdisk" is not found on node "testosc" [Required = "1.0.9-1" ; Found = "1.0.10-1"].
  - Cause: Cause Of Problem Not Available
  - Action: User Action Not Available
- testosc:PRVF-7533 : Proper version of package "cvuqdisk" is not found on node "testosc" [Required = "1.0.9-1" ; Found = "1.0.10-1"].
  - Cause: Cause Of Problem Not Available
  - Action: User Action Not Available

Operation Failed on Nodes: [testosc, testosb, testosa]

Verification result of failed node: testosc
Details:
- Unable to determine the shareability of device /dev/oracleasm/disks/GRID01 on nodes: testosa,testosb,testosc
  - Cause: Cause Of Problem Not Available
  - Action: User Action Not Available
- PRVF-9802 : Attempt to get udev info from node "testosc" failed
  - Cause: Attempt to read the udev permissions file failed, probably due to missing permissions directory, missing or invalid permissions file, or permissions file not accessible to use account running the check.
  - Action: Make sure that the udev permissions directory is created, the udev permissions file is available, and it has correct read permissions for access by the user running the check.

Verification result of failed node: testosb
Details:
- Unable to determine the shareability of device /dev/oracleasm/disks/GRID01 on nodes: testosa,testosb,testosc
  - Cause: Cause Of Problem Not Available
  - Action: User Action Not Available
- PRVF-9802 : Attempt to get udev info from node "testosb" failed
  - Cause: Attempt to read the udev permissions file failed, probably due to missing permissions directory, missing or invalid permissions file, or permissions file not accessible to use account running the check.
  - Action: Make sure that the udev permissions directory is created, the udev permissions file is available, and it has correct read permissions for access by the user running the check.

Verification result of failed node: testosa
Details:
- Unable to determine the shareability of device /dev/oracleasm/disks/GRID01 on nodes: testosa,testosb,testosc
  - Cause: Cause Of Problem Not Available
  - Action: User Action Not Available
- PRVF-9802 : Attempt to get udev info from node "testosa" failed
  - Cause: Attempt to read the udev permissions file failed, probably due to missing permissions directory, missing or invalid permissions file, or permissions file not accessible to use account running the check.
  - Action: Make sure that the udev permissions directory is created, the udev permissions file is available, and it has correct read permissions for access by the user running the check.

This error can be ignored.

Network Time Protocol (NTP)

Network Time Protocol (NTP) - This task verifies cluster time synchronization on clusters that use Network Time Protocol (NTP).

Error:
- PRVF-5507 : NTP daemon or service is not running on any node but NTP configuration file exists on the following node(s): testosa
  - Cause: The configuration file was found on at least one node though no NTP daemon or service was running.
  - Action: If you plan to use CTSS for time synchronization then NTP configuration must be uninstalled on all nodes of the cluster.

Check Failed on Nodes: [testosc, testosb, testosa]

Verification result of failed node: testosc
Details:
- PRVF-5402 : Warning: Could not find NTP configuration file "/etc/ntp.conf" on node "testosc"
  - Cause: NTP might not have been configured on the node, or NTP might have been configured with a configuration file different from the one indicated.
  - Action: Configure NTP on the node if not done so yet. Refer to your NTP vendor documentation for details.

Verification result of failed node: testosb
Details:
- PRVF-5402 : Warning: Could not find NTP configuration file "/etc/ntp.conf" on node "testosb"
  - Cause: NTP might not have been configured on the node, or NTP might have been configured with a configuration file different from the one indicated.
  - Action: Configure NTP on the node if not done so yet. Refer to your NTP vendor documentation for details.

Verification result of failed node: testosa

# As the report indicates, delete the /etc/ntp.conf file on the primary node (testosa).

Task resolv.conf Integrity

This is a test environment without DNS, so either move resolv.conf out of the way as shown below or simply ignore the failure.

mv /etc/resolv.conf /etc/resolv.conf_bak

Wiping the disk header

-- Problem
[root@icpspnet02 dev]# oracleasm createdisk asm_ocr1 /dev/mapper/asmtotal1
Device "/dev/mapper/asmtotal1" is already labeled for ASM disk "ASM_OCR1"

-- Solution
Wipe the disk headers:
dd if=/dev/zero of=/dev/sdb1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdc1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdd1 bs=1024 count=100
dd if=/dev/zero of=/dev/sde1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdf1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdg1 bs=1024 count=100

Create the disk. Run oracleasm init first to load the kernel driver, then create it:
oracleasm init
oracleasm createdisk asm_ocr1 /dev/mapper/asmtotal1
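Because dd overwrites data irreversibly, it can help to print the commands for review before running any of them. The following is a minimal sketch under that assumption: the function name print_wipe_commands is hypothetical, and it only prints the same dd invocation used above for each device name passed in. Nothing is written to disk.

```shell
# Print (do not execute) the header-wipe command for each device argument.
# Each printed command zeroes the first 100 KiB of the device (bs=1024 count=100).
print_wipe_commands() {
    for dev in "$@"; do
        printf 'dd if=/dev/zero of=%s bs=1024 count=100\n' "$dev"
    done
}

# Review the output, then paste or pipe it to a root shell once the device list is confirmed.
print_wipe_commands /dev/sdb1 /dev/sdc1 /dev/sdd1
```

Checking the device list against lsblk or oracleasm listdisks before executing is a sensible extra step, since device names can change between reboots.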

Deinstalling Grid

Stop the services

-- Check the CRS/OHAS status on all nodes
/oracle/app/11.2.0/grid/bin/crsctl check cluster -all

-- Check the cluster resource status on all nodes
/oracle/app/11.2.0/grid/bin/crsctl status res -t

-- Stop CRS/OHAS on all nodes; add -f (force) if a normal stop fails or some components are broken
/oracle/app/11.2.0/grid/bin/crsctl stop cluster -all [-f]

-- Start CRS/OHAS on all nodes
/oracle/app/11.2.0/grid/bin/crsctl start cluster -all

Deinstall the Grid software

su - grid
cd /oracle/app/11.2.0/grid/deinstall/
./deinstall

Accept the defaults at each prompt. Near the end, the tool asks for a command like the following to be run as root:

/tmp/deinstall2024-05-21_03-37-42PM/perl/bin/perl -I/tmp/deinstall2024-05-21_03-37-42PM/perl/lib -I/tmp/deinstall2024-05-21_03-37-42PM/crs/install /tmp/deinstall2024-05-21_03-37-42PM/crs/install/rootcrs.pl -force -deconfig -paramfile "/tmp/deinstall2024-05-21_03-37-42PM/response/deinstall_Ora11g_gridinfrahome1.rsp" -lastnode

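Make sure every CRS service is stopped at this point. If some processes refuse to stop, find them with ps -ef|grep pmon and terminate them with kill -9 <PID>.

Once they are gone, the root script finishes after a short while; then press Enter in the deinstall session to let it complete.

Remove the contents of the related directories

-- Remove /opt/ORCLfmap/
[root@racdb01 opt]# rm -rf /opt/ORCLfmap/

-- Remove everything under /etc/oracle, including the ocr* files
rm -rf /etc/oracle/*

-- Remove the contents of the Grid directories
rm -rf /oracle/app/grid/*
rm -rf /oracle/app/11.2.0/grid/*
rm -rf /oracle/app/oraInventory/*

-- Remove only the helper scripts that root.sh copied into /usr/local/bin, not the whole directory
rm -f /usr/local/bin/dbhome /usr/local/bin/oraenv /usr/local/bin/coraenv

-- In /tmp, remove the files and directories owned by grid:oinstall
find /tmp -mindepth 1 -maxdepth 1 -user grid -group oinstall -exec rm -rf {} +

Wipe the data on the ASM disks

Wipe the disk headers:
dd if=/dev/zero of=/dev/sdb1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdc1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdd1 bs=1024 count=100
dd if=/dev/zero of=/dev/sde1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdf1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdg1 bs=1024 count=100

Create the disks. Run oracleasm init first to load the kernel driver, then create them:
oracleasm init
oracleasm createdisk ocr01 /dev/sdb1
oracleasm createdisk ocr02 /dev/sdc1
oracleasm createdisk ocr03 /dev/sdd1
oracleasm createdisk data01 /dev/sde1
oracleasm createdisk data02 /dev/sdf1
oracleasm createdisk data03 /dev/sdg1

Troubleshooting

-- Problem
After the disk header has been wiped, creating the disk again fails:
[root@racdb01 grid]# oracleasm createdisk data01 /dev/sde1
Writing disk header: done
Instantiating disk: failed
Clearing disk header: done

-- Solution
Restart the oracleasm driver; the disk can then be created successfully:
[root@racdb01 opt]# systemctl restart oracleasm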

Grid service operations

Single-node operations

-- Check whether CRS/OHAS autostart is enabled (local node only)
$GRID_ORACLE_HOME/bin/crsctl config crs
Example:
[root@racdb01 etc]# /oracle/app/11.2.0/grid/bin/crsctl config crs
CRS-4622: Oracle High Availability Services autostart is enabled.

-- If autostart is not enabled, enable it (local node only)
$GRID_ORACLE_HOME/bin/crsctl enable crs

-- Stop CRS/OHAS (local node only)
$GRID_ORACLE_HOME/bin/crsctl stop crs

-- Check the CRS/OHAS resource status and disk group mounts
$GRID_ORACLE_HOME/bin/crsctl status resource -t

-- Start CRS/OHAS (local node only)
$GRID_ORACLE_HOME/bin/crsctl start crs

Cluster-wide operations (all nodes)

-- Check the CRS/OHAS status on all nodes
$GRID_ORACLE_HOME/bin/crsctl check cluster -all
Example:
[root@racdb01 etc]# /oracle/app/11.2.0/grid/bin/crsctl check cluster -all
**************************************************************
racdb01:
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
**************************************************************
racdb02:
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
**************************************************************
racdb03:
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
**************************************************************

-- Stop CRS/OHAS on all nodes; add -f (force) if a normal stop fails or some components are broken
crsctl stop cluster -all [-f]

-- Start CRS/OHAS on all nodes
crsctl start cluster -all
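When the check is run from a script, the output has to be reduced to a yes/no answer per node. The sketch below is one way to do that, assuming the output format shown in the example above (a line holding only the node name and a colon, followed by three "is online" lines). The function name nodes_not_fully_online is hypothetical, and the sample input is made up for illustration.

```shell
# Read `crsctl check cluster -all` output on stdin and print every node
# that reported fewer than three "is online" lines (CRS, CSS, EVM).
nodes_not_fully_online() {
    awk '
        # A node header is a line containing only "<hostname>:"
        /^[A-Za-z0-9_.-]+:[ \t]*$/ {
            node = $0
            sub(/:[ \t]*$/, "", node)
            order[++n] = node
            online[node] = 0
            next
        }
        # Count healthy components under the current node
        /is online[ \t]*$/ && node != "" { online[node]++ }
        END {
            for (i = 1; i <= n; i++)
                if (online[order[i]] < 3) print order[i]
        }
    '
}

# Illustrative input: racdb02 is missing one component.
nodes_not_fully_online <<'SAMPLE'
**************************************************************
racdb01:
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
**************************************************************
racdb02:
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
**************************************************************
SAMPLE
```

On a live cluster the function would be fed from the real command, for example `crsctl check cluster -all | nodes_not_fully_online`; an empty result means every listed node reported all three components online.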

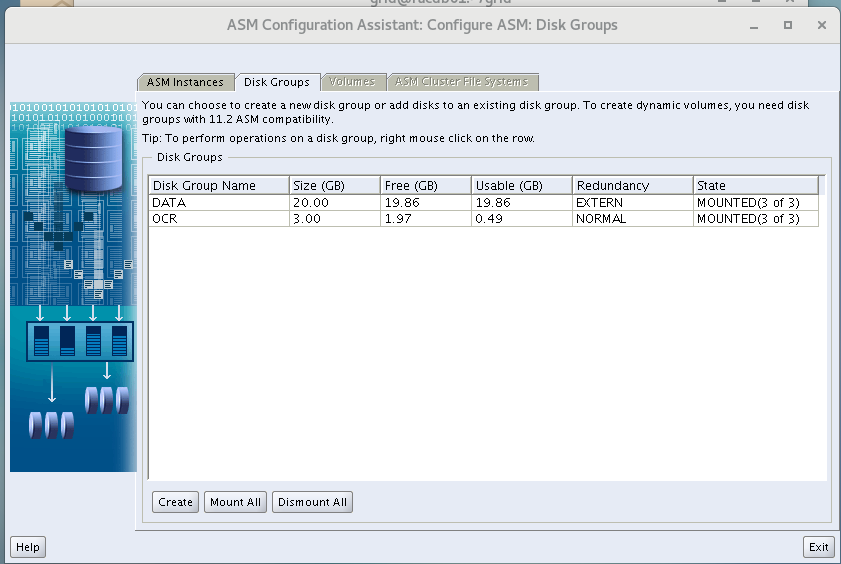

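Creating ASM disk groups

To keep runaway archive logs from filling the disks later on, only the OCR and DATA disk groups were planned.

Create the DATA disk group

-- Launch the graphical tool to create the DATA disk group
su - grid
asmca

When the disk group has been created, click Exit at the bottom right.

Extensions

Adjusting the ASM AU size

Supplementary notes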

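ASM AU

In an ASM disk group, the basic unit of space allocation is the allocation unit (AU). Once initialized, every ASM disk is divided into AUs.

When a disk group is created, the AU size can be set through the AU_SIZE attribute (from version 11.1 on). Valid sizes are 1, 2, 4, 8, 16, 32, and 64 MB; if none is specified, the default is 1 MB (4 MB on Exadata).

The AU size is an attribute of the disk group, not of a disk or of the ASM instance, so each ASM disk group can have its own AU size.

ASM Extents

One or more AUs form an extent, and one or more ASM extents form an ASM file, so an ASM file is logically made up of extents.

Physical extents must be distinguished from virtual extents. A virtual extent, also called an extent set, consists of:

- one physical extent in an external-redundancy disk group

- at least 2 physical extents in a normal-redundancy disk group

- at least 3 physical extents in a high-redundancy disk group

Before ASM 11.1 the extent size was fixed. From 11.1 on, variable-size extents were introduced to better support large data files, reduce the SGA required by the ASM and database instances, and speed up operations such as creating and opening files. The initial extent size equals the disk group's AU_SIZE; as a file is allocated more and more extents, the extent size grows to 4 and then 16 times AU_SIZE. The feature takes effect automatically when a file is created or resized, provided the disk group attributes COMPATIBLE.ASM and COMPATIBLE.RDBMS are set to 11.1 or higher.

The extent size of a file changes as follows:

For the first 20000 extent sets of a file, the extent size equals the disk group's AU_SIZE.

For the next 20000 extent sets, the extent size equals AU_SIZE*4.

If a file has more than 40000 extent sets, every extent after that has a size of AU_SIZE*16.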
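The three rules above reduce to a small lookup. As a minimal sketch (the function name extent_size_mb and the 0-based numbering of extent sets are assumptions made for illustration, not anything ASM exposes), the extent size for a given position in a file can be computed like this:

```shell
# extent_size_mb AU_SIZE_MB EXTENT_SET_INDEX
# Prints the extent size in MB for the extent set at the given 0-based index,
# following the 1x / 4x / 16x rule described in the text.
extent_size_mb() {
    if [ "$2" -lt 20000 ]; then
        echo "$1"                 # first 20000 extent sets: AU_SIZE
    elif [ "$2" -lt 40000 ]; then
        echo $(( $1 * 4 ))        # next 20000 extent sets: AU_SIZE*4
    else
        echo $(( $1 * 16 ))       # everything after that: AU_SIZE*16
    fi
}

extent_size_mb 1 0        # first extent set, 1 MB AU
extent_size_mb 1 20000    # first extent set in the 4x range
extent_size_mb 4 40000    # first extent set in the 16x range, 4 MB AU
```

With a 1 MB AU the first 20000 extent sets cover 20000 MB, roughly 19.5 GB, so a file has to grow past that point before any 4 MB extents are allocated.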

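The variable-extent feature has an annoying bug, 8898852; see MOS note 965751.1 for details.

Translator's note: I doubt variable extents help much in practice. Most users specify a size when adding a data file. With AU_SIZE set to 1 MB, only data files larger than about 20 GB get any 4 MB extents, and most DBAs I have worked with size their data files at around 20 GB. Oracle does offer bigfile tablespaces, but few people use them.

ASM Mirroring

ASM mirroring protects data integrity by storing an additional copy of each piece of data on a different disk. When a disk group is created, the ASM administrator can choose its mirroring mode:

- External: no mirroring

- Normal: 2 copies

- High: 3 copies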
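The copy count translates directly into a first-order capacity estimate. The sketch below is a rough illustration only: the function name usable_capacity_gb is hypothetical, and the estimate assumes every file uses the disk group's default redundancy and ignores ASM metadata and the free space needed to re-mirror after a disk failure.

```shell
# usable_capacity_gb RAW_GB REDUNDANCY
# Divides raw capacity by the number of copies kept for each extent.
usable_capacity_gb() {
    case "$2" in
        external) copies=1 ;;   # no mirroring
        normal)   copies=2 ;;   # two copies
        high)     copies=3 ;;   # three copies
        *) echo "unknown redundancy: $2" >&2; return 1 ;;
    esac
    echo $(( $1 / copies ))
}

usable_capacity_gb 300 external
usable_capacity_gb 300 normal
usable_capacity_gb 300 high
```

For real sizing, the USABLE_FILE_MB column of V$ASM_DISKGROUP is the figure to rely on, since it accounts for the factors this estimate leaves out.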

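ASM mirrors at the granularity of the extent, not the disk or the block: mirroring is implemented by mirroring each extent that makes up an ASM file. The redundancy level can be set per file. For example, in a normal-redundancy disk group one file may have each extent mirrored once (the default), while another file in the same disk group is mirrored twice, giving three copies (assuming the disk group has at least 3 failgroups). In fact, ASM metadata files in a normal-redundancy disk group are kept as three copies, which again requires at least 3 failgroups in the disk group.

Translator's note: in a normal-redundancy disk group some files really are mirrored twice, giving three copies. These are all ASM metadata files, and the same behavior shows up repeatedly in later articles of the series.

ASM Failgroups

An ASM disk group can be logically divided into failgroups. Failgroups are specified when the disk group is created; if none are specified, ASM places each disk in its own failgroup. Exadata may behave differently: all disks from the same storage cell are automatically placed in one failgroup, even if you specify none.

A normal-redundancy disk group requires at least 2 failgroups, a high-redundancy disk group requires at least 3, and an external-redundancy disk group does not require failgroups.

When an extent is allocated for a file with two copies, ASM allocates a primary copy and a mirror copy. The primary copy is stored on one disk, and the mirror copy is stored on a disk in a different failgroup.

When disks are added to an ASM disk group, the failgroup can be specified manually; otherwise ASM places each disk in an appropriate failgroup itself.

Reference: Oracle ASM 翻译系列第一弹:基础知识 ASM AU,Extents,Mirroring 和 Failgroups (CSDN blog)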

Configure root's environment variables

# Add the bin directory of the grid user's ORACLE_HOME to root's PATH on every node
[grid@racdb01:/home/grid]$env|grep ORACLE_HOME
ORACLE_HOME=/oracle/app/11.2.0/grid

[root@racdb01 ~]# grep PATH ~/.bash_profile
PATH=$PATH:$HOME/bin:/oracle/app/11.2.0/grid/bin
export PATH
[root@racdb01 ~]# source .bash_profile

[root@racdb02 ~]# grep PATH ~/.bash_profile
PATH=$PATH:$HOME/bin:/oracle/app/11.2.0/grid/bin
export PATH
[root@racdb02 ~]# source .bash_profile

[root@racdb03 ~]# grep PATH ~/.bash_profile
PATH=$PATH:$HOME/bin:/oracle/app/11.2.0/grid/bin
export PATH
[root@racdb03 ~]# source .bash_profile
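If the profile is edited by a script rather than by hand, the change should be safe to repeat. The sketch below shows one possible approach, assuming a plain append is acceptable in place of editing the existing PATH line; the function name add_grid_bin_to_profile is hypothetical.

```shell
# add_grid_bin_to_profile PROFILE_FILE GRID_BIN_DIR
# Appends an export line for GRID_BIN_DIR unless the profile already mentions it,
# so running it twice does not duplicate the entry.
add_grid_bin_to_profile() {
    if ! grep -qF "$2" "$1" 2>/dev/null; then
        # Single quotes keep $PATH literal so it is expanded at login, not now.
        printf 'export PATH=$PATH:%s\n' "$2" >> "$1"
    fi
}

# Example invocation, to be run as root on each node:
# add_grid_bin_to_profile ~/.bash_profile /oracle/app/11.2.0/grid/bin
```

After the change, `source ~/.bash_profile` (or a fresh login) makes crsctl, ocrcheck and the other Grid tools available without typing the full path.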

Check the OCR

[root@racdb01 ~]# ocrcheck
Status of Oracle Cluster Registry is as follows :
         Version                  :          3
         Total space (kbytes)     :     262120
         Used space (kbytes)      :       2728
         Available space (kbytes) :     259392
         ID                       :  875014256
         Device/File Name         :       +OCR
                                    Device/File integrity check succeeded
                                    Device/File not configured
                                    Device/File not configured
                                    Device/File not configured
                                    Device/File not configured
         Cluster registry integrity check succeeded
         Logical corruption check succeeded

Installing the database software

Installation log: /oracle/app/oraInventory/logs/installActions2024-05-22_11-50-17AM.log

Upload the installation packages

p13390677_112040_Linux-x86-64_1of7.zip
p13390677_112040_Linux-x86-64_2of7.zip
chown -R oracle:oinstall /home/oracle

Unpack the packages as the oracle user

su - oracle
unzip p13390677_112040_Linux-x86-64_1of7.zip
unzip p13390677_112040_Linux-x86-64_2of7.zip

# Start VNC
[oracle@racdb01:/home/oracle]$vncserver

Warning: racdb01:1 is taken because of /tmp/.X11-unix/X1
Remove this file if there is no X server racdb01:1

Warning: racdb01:2 is taken because of /tmp/.X11-unix/X2
Remove this file if there is no X server racdb01:2

Warning: racdb01:3 is taken because of /tmp/.X11-unix/X3
Remove this file if there is no X server racdb01:3

You will require a password to access your desktops.

Password:
Verify:
Would you like to enter a view-only password (y/n)? y
Password:
Verify:

New 'racdb01:5 (oracle)' desktop is racdb01:5

Creating default startup script /home/oracle/.vnc/xstartup
Creating default config /home/oracle/.vnc/config
Starting applications specified in /home/oracle/.vnc/xstartup
Log file is /home/oracle/.vnc/racdb01:5.log

Connect with a VNC client and run the installer in the graphical session

su - oracle
cd database
./runInstaller -jreLoc /etc/alternatives/jre_1.8.0

Skip software updates

Install database software only

Check SSH user equivalence between the nodes:

Select the languages

Select Enterprise Edition

Specify the installation location

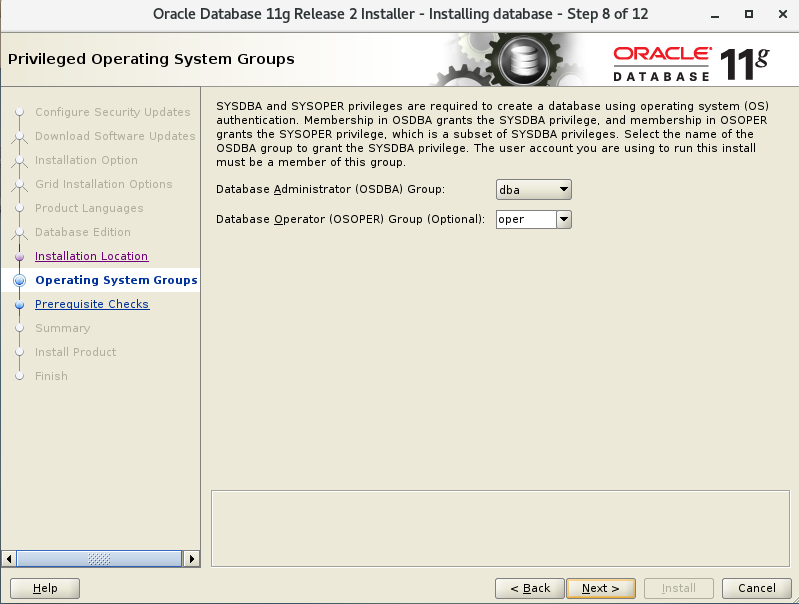

Privileged operating system groups

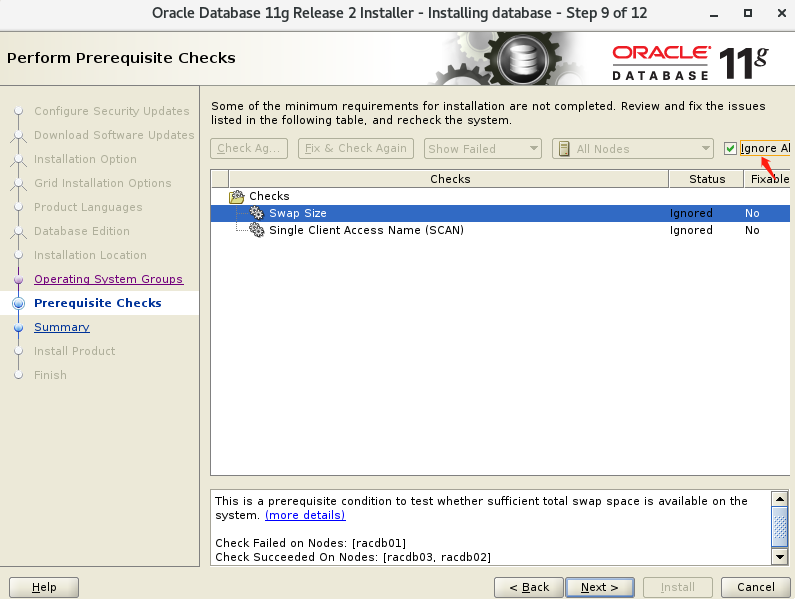

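Prerequisite checks

Both failures can be ignored; click Next to start the installation.

This is a known issue on Linux 7 and can be worked around by adding one linker flag.

As the error message indicates, locate this file: /oracle/app/oracle/product/11.2.0/db_1/sysman/lib/ins_emagent.mk

Back the file up first in case the original is needed later.

cp /oracle/app/oracle/product/11.2.0/db_1/sysman/lib/ins_emagent.mk /oracle/app/oracle/product/11.2.0/db_1/sysman/lib/ins_emagent.mk.bak

# Append -lnnz11 to line 176, as shown below
vim /oracle/app/oracle/product/11.2.0/db_1/sysman/lib/ins_emagent.mk
171 #===========================
172 # emdctl
173 #===========================
174
175 $(SYSMANBIN)emdctl:
176         $(MK_EMAGENT_NMECTL) -lnnz11

After saving the change, click Retry.

Run the script on each of the 3 nodes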

-- Node 1
[root@racdb01 ~]# /oracle/app/oracle/product/11.2.0/db_1/root.sh
Performing root user operation for Oracle 11g

The following environment variables are set as:
    ORACLE_OWNER= oracle
    ORACLE_HOME=  /oracle/app/oracle/product/11.2.0/db_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:
The contents of "dbhome" have not changed. No need to overwrite.
The contents of "oraenv" have not changed. No need to overwrite.
The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by
Database Configuration Assistant when a database is created
Finished running generic part of root script.
Now product-specific root actions will be performed.
Finished product-specific root actions.

-- Node 2
[root@racdb02 etc]# /oracle/app/oracle/product/11.2.0/db_1/root.sh
Performing root user operation for Oracle 11g

The following environment variables are set as:
    ORACLE_OWNER= oracle
    ORACLE_HOME=  /oracle/app/oracle/product/11.2.0/db_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:
The contents of "dbhome" have not changed. No need to overwrite.
The contents of "oraenv" have not changed. No need to overwrite.
The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by
Database Configuration Assistant when a database is created
Finished running generic part of root script.
Now product-specific root actions will be performed.
Finished product-specific root actions.

-- Node 3
[root@racdb03 etc]# /oracle/app/oracle/product/11.2.0/db_1/root.sh
Performing root user operation for Oracle 11g

The following environment variables are set as:
    ORACLE_OWNER= oracle
    ORACLE_HOME=  /oracle/app/oracle/product/11.2.0/db_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:
The contents of "dbhome" have not changed. No need to overwrite.
The contents of "oraenv" have not changed. No need to overwrite.
The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by
Database Configuration Assistant when a database is created
Finished running generic part of root script.
Now product-specific root actions will be performed.
Finished product-specific root actions.

Creating the database

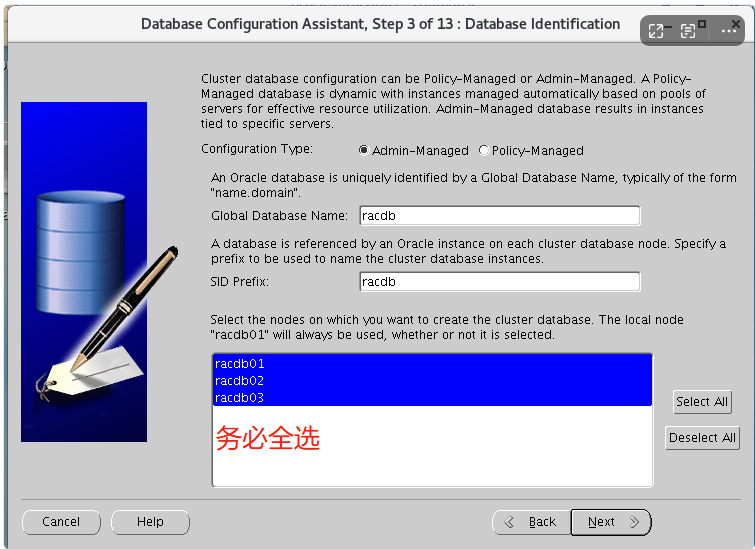

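Launch the graphical interface with the dbca command.

Change the redo log size and add groups: the default is 3 redo log groups of 50 MB each. Raise the size to 500 MB, or more if the workload calls for it.

In production, each redo log file should be at least 200 MB, with at least 5 groups per node (thread).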
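If the groups are added after database creation rather than in dbca, the statements follow a regular pattern and can be generated. The sketch below prints them for review; it does not connect to the database. The function name print_add_logfile_sql, the starting group number 11, and the +DATA disk group are assumptions chosen for illustration, and the original 50 MB groups would still need to be dropped separately once they are inactive.

```shell
# print_add_logfile_sql THREADS GROUPS_PER_THREAD SIZE_MB DISKGROUP FIRST_GROUP
# Prints one ALTER DATABASE ADD LOGFILE statement per new redo log group.
# The body runs in a subshell "( ... )" so the loop variables stay local.
print_add_logfile_sql() (
    threads=$1 per_thread=$2 size_mb=$3 diskgroup=$4 group=$5
    t=1
    while [ "$t" -le "$threads" ]; do
        g=1
        while [ "$g" -le "$per_thread" ]; do
            printf "ALTER DATABASE ADD LOGFILE THREAD %d GROUP %d ('+%s') SIZE %dM;\n" \
                "$t" "$group" "$diskgroup" "$size_mb"
            group=$(( group + 1 ))
            g=$(( g + 1 ))
        done
        t=$(( t + 1 ))
    done
)

# Three RAC instances (threads), five 500 MB groups each, numbered from 11.
print_add_logfile_sql 3 5 500 DATA 11
```

The output can be saved to a file and run from sqlplus as SYSDBA after checking that the group numbers do not collide with existing entries in V$LOG.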

Check the cluster resources

After the check, reboot the operating system to verify that the cluster stack starts automatically at boot.

[root@racdb01 ~]# /oracle/app/11.2.0/grid/bin/crsctl status res -t
--------------------------------------------------------------------------------
NAME           TARGET  STATE        SERVER                   STATE_DETAILS
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.DATA.dg
               ONLINE  ONLINE       racdb01
               ONLINE  ONLINE       racdb02
               ONLINE  ONLINE       racdb03
ora.LISTENER.lsnr
               ONLINE  ONLINE       racdb01
               ONLINE  ONLINE       racdb02
               ONLINE  ONLINE       racdb03
ora.OCR.dg
               ONLINE  ONLINE       racdb01
               ONLINE  ONLINE       racdb02
               ONLINE  ONLINE       racdb03
ora.asm
               ONLINE  ONLINE       racdb01                  Started
               ONLINE  ONLINE       racdb02                  Started
               ONLINE  ONLINE       racdb03                  Started
ora.gsd
               OFFLINE OFFLINE      racdb01
               OFFLINE OFFLINE      racdb02
               OFFLINE OFFLINE      racdb03
ora.net1.network
               ONLINE  ONLINE       racdb01
               ONLINE  ONLINE       racdb02
               ONLINE  ONLINE       racdb03
ora.ons
               ONLINE  ONLINE       racdb01
               ONLINE  ONLINE       racdb02
               ONLINE  ONLINE       racdb03
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.LISTENER_SCAN1.lsnr
      1        ONLINE  ONLINE       racdb01
ora.cvu
      1        ONLINE  ONLINE       racdb01
ora.oc4j
      1        ONLINE  ONLINE       racdb01
ora.racdb.db
      1        ONLINE  ONLINE       racdb01                  Open
      2        ONLINE  ONLINE       racdb02                  Open
      3        ONLINE  ONLINE       racdb03                  Open
ora.racdb01.vip
      1        ONLINE  ONLINE       racdb01
ora.racdb02.vip
      1        ONLINE  ONLINE       racdb02
ora.racdb03.vip
      1        ONLINE  ONLINE       racdb03
ora.scan1.vip
      1        ONLINE  ONLINE       racdb01

Reference: VMvare workstation创建Centos7.6虚拟机安装Oracle 11gR2 RAC 三节点(ASMlib管理asm磁盘) (CSDN blog)

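Supplementary notes

Deleting the database

su - oracle
dbca

Log location: /oracle/app/oracle/cfgtoollogs/dbca

Click Cancel at the end to exit.

Deinstalling the database software

Deinstallation procedure:

Run the deinstall tool.

Press Enter to accept the defaults.

Database type to deinstall: choose 3, RAC database.

Storage type of the data files (ASM or file system): choose ASM.

Confirm with y to continue the removal.

su - oracle
cd /oracle/app/oracle/product/11.2.0/db_1/deinstall
./deinstall

-- Remove the user and groups (optional)
userdel -r oracle
groupdel dba
groupdel oinstall
groupdel oper
groupdel asmdba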

Deinstalling Grid

Stop the services

-- Check the CRS/OHAS status on all nodes
/oracle/app/11.2.0/grid/bin/crsctl check cluster -all

-- Check the cluster resource status on all nodes
/oracle/app/11.2.0/grid/bin/crsctl status res -t

-- Stop CRS/OHAS on all nodes; add -f (force) if a normal stop fails or some components are broken
/oracle/app/11.2.0/grid/bin/crsctl stop cluster -all [-f]

-- Start CRS/OHAS on all nodes
/oracle/app/11.2.0/grid/bin/crsctl start cluster -all

Deinstall the Grid software

su - grid
cd /oracle/app/11.2.0/grid/deinstall/
./deinstall

Accept the defaults at each prompt. Near the end, the tool asks for a command like the following to be run as root:

/tmp/deinstall2024-05-21_03-37-42PM/perl/bin/perl -I/tmp/deinstall2024-05-21_03-37-42PM/perl/lib -I/tmp/deinstall2024-05-21_03-37-42PM/crs/install /tmp/deinstall2024-05-21_03-37-42PM/crs/install/rootcrs.pl -force -deconfig -paramfile "/tmp/deinstall2024-05-21_03-37-42PM/response/deinstall_Ora11g_gridinfrahome1.rsp" -lastnode

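Make sure every CRS service is stopped at this point. If some processes refuse to stop, find them with ps -ef|grep pmon and terminate them with kill -9 <PID>.

Once they are gone, the root script finishes after a short while; then press Enter in the deinstall session to let it complete.

Remove the contents of the related directories

-- Remove /opt/ORCLfmap/
[root@racdb01 opt]# rm -rf /opt/ORCLfmap/

-- Remove everything under /etc/oracle, including the ocr* files
rm -rf /etc/oracle/*

-- Remove the contents of the Grid directories
rm -rf /oracle/app/grid/*
rm -rf /oracle/app/11.2.0/grid/*
rm -rf /oracle/app/oraInventory/*

-- Remove only the helper scripts that root.sh copied into /usr/local/bin, not the whole directory
rm -f /usr/local/bin/dbhome /usr/local/bin/oraenv /usr/local/bin/coraenv

-- Remove temporary installation files under /tmp
cd /tmp
rm -rf CVU*
rm -rf OraInstall*
rm -rf /etc/oraInst.loc
rm /etc/oratab

Wipe the data on the ASM disks

Wipe the disk headers:
dd if=/dev/zero of=/dev/sdb1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdc1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdd1 bs=1024 count=100
dd if=/dev/zero of=/dev/sde1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdf1 bs=1024 count=100
dd if=/dev/zero of=/dev/sdg1 bs=1024 count=100

Delete the disks. Run oracleasm init first to load the kernel driver, then delete them:
oracleasm init
oracleasm deletedisk ocr01
oracleasm deletedisk ocr02
oracleasm deletedisk ocr03
oracleasm deletedisk data01
oracleasm deletedisk data02
oracleasm deletedisk data03

Remove the users and groups (optional)

-- Remove the grid user and its groups
userdel -r grid
groupdel dba
groupdel oinstall
groupdel oper
groupdel asmadmin
groupdel asmoper
groupdel asmdba

Troubleshooting

-- Problem
After the disk header has been wiped, creating the disk again fails:
[root@racdb01 grid]# oracleasm createdisk data01 /dev/sdg1
Writing disk header: done
Instantiating disk: failed
Clearing disk header: done

-- Solution
Restart the oracleasm driver; the disk can then be created successfully:
[root@racdb01 opt]# systemctl restart oracleasm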

