(1)Kubernetes 区域可采用 Kubeadm 方式进行安装。(5分)

(2)要求在 Kubernetes 环境中,通过yaml文件的方式,创建2个Nginx Pod分别放置在两个不同的节点上,Pod使用hostPath类型的存储卷挂载,节点本地目录共享使用 /data,2个Pod副本测试页面二者要不同,以做区分,测试页面可自己定义。(20分)

(3)编写service对应的yaml文件,使用NodePort类型和TCP 30000端口将Nginx服务发布出去。(10分)

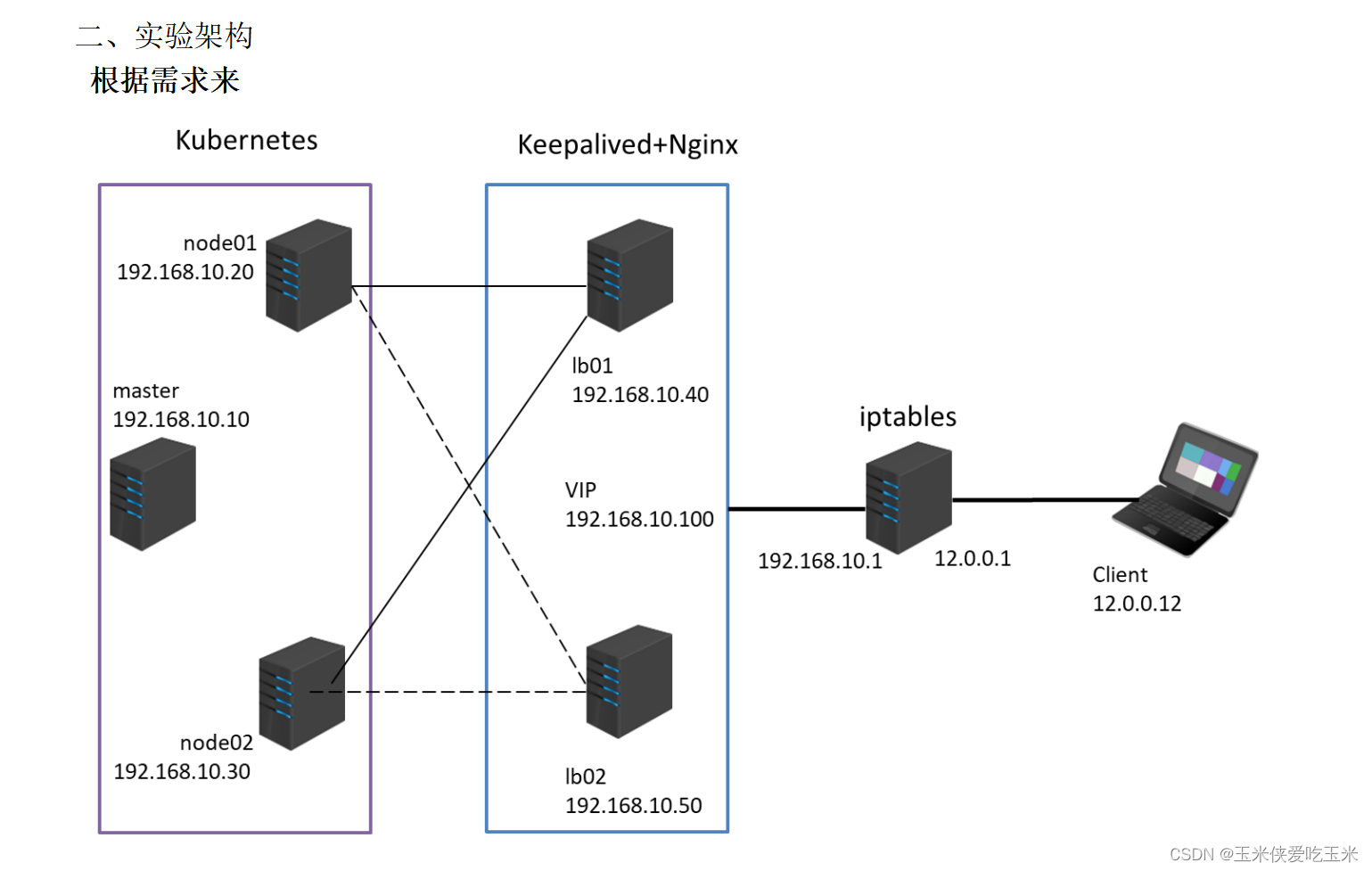

(4)负载均衡区域配置Keepalived+Nginx,实现负载均衡高可用,通过VIP 192.168.10.100和自定义的端口号即可访问K8S发布出来的服务。(20分)

(5)iptables防火墙服务器,设置双网卡,并且配置SNAT和DNAT转换实现外网客户端可以通过12.0.0.1访问内网的Web服务。(10分)

注:编写实验报告,包括实验步骤、实验配置、结果验证截图等。

I. Lab environment

192.168.217.99 master01

192.168.217.66 node01

192.168.217.77 node02

192.168.217.22 ng01 (primary load balancer)

192.168.217.44 ng02 (backup load balancer)

192.168.217.55 / 12.0.0.1 iptables (iptables gateway)

12.0.0.12 client

II. Install k8s

(1) The Kubernetes zone may be installed with kubeadm. (5 points)

    [root@master01 data]# kubectl get cs
    Warning: v1 ComponentStatus is deprecated in v1.19+
    NAME                 STATUS    MESSAGE             ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy   {"health":"true"}
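The output above can be turned into a pass/fail check by scanning the STATUS column. The following is only a sketch: the function name `unhealthy_components` is made up for illustration, and the sample text is copied from the output above so the script runs without a cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list components whose STATUS column is not "Healthy".
# Reads the text printed by `kubectl get cs` on stdin.
unhealthy_components() {
    awk '
        /^Warning:/ { next }    # skip the deprecation notice
        $1 == "NAME" { next }   # skip the table header
        NF >= 2 && $2 != "Healthy" { print $1 }
    '
}

# Sample input copied from the lab output, so the sketch runs offline.
sample='Warning: v1 ComponentStatus is deprecated in v1.19+
NAME STATUS MESSAGE ERROR
scheduler Healthy ok
controller-manager Healthy ok
etcd-0 Healthy {"health":"true"}'

bad=$(printf '%s\n' "$sample" | unhealthy_components)
if [ -z "$bad" ]; then
    echo "all components healthy"
else
    echo "unhealthy: $bad"
fi
```

On the master node the same function could be fed live data with `kubectl get cs | unhealthy_components`.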

III. Start two nginx Pods

(2) In the Kubernetes environment, use YAML files to create two Nginx Pods placed on two different nodes. Each Pod mounts a hostPath volume backed by the node-local directory /data. The two Pods must serve different test pages so they can be told apart; the page content is up to you. (20 points)

1. Start the first nginx Pod

    [root@master01 shiyan]# cat pod2.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx02
    spec:
      containers:
      - name: nginx
        image: nginx:1.18.0
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
        volumeMounts:                       # how the volume is mounted inside the container
        - name: web                         # volume to mount; must match volumes.name
          mountPath: /usr/share/nginx/html  # path inside the container where the volume is mounted
          readOnly: false                   # false lets the container read and write the volume
      volumes:                              # exposes a host directory or file as a volume for the Pod
      - name: web                           # user-chosen volume name
        hostPath:                           # volume type is hostPath
          path: /data/                      # host directory or file to mount into the Pod
          type: DirectoryOrCreate

2. Start the second nginx Pod

    [root@master01 shiyan]# cat pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx01
    spec:
      containers:
      - name: nginx
        image: nginx:1.18.0
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
        volumeMounts:                       # how the volume is mounted inside the container
        - name: web                         # volume to mount; must match volumes.name
          mountPath: /usr/share/nginx/html  # path inside the container where the volume is mounted
          readOnly: false                   # false lets the container read and write the volume
      volumes:                              # exposes a host directory or file as a volume for the Pod
      - name: web                           # user-chosen volume name
        hostPath:                           # volume type is hostPath
          path: /data/                      # host directory or file to mount into the Pod
          type: DirectoryOrCreate
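Neither manifest says which node the Pod should run on, so placement is left to the scheduler. One way to make the "two different nodes" requirement deterministic is to set `spec.nodeName`. The sketch below is an assumption, not what this lab actually ran: `render_pod` is a hypothetical helper, and the `app: nginx` label is added up front so that the later `kubectl label` step would be unnecessary.

```shell
#!/usr/bin/env bash
# Hypothetical generator: print a Pod manifest pinned to one node.
# $1 = Pod name, $2 = node name (node01 / node02 in this lab).
render_pod() {
    local name=$1 node=$2
    cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
  labels:
    app: nginx            # assumption: label set at creation time
spec:
  nodeName: ${node}       # assumption: pin the Pod instead of relying on the scheduler
  containers:
  - name: nginx
    image: nginx:1.18.0
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    volumeMounts:
    - name: web
      mountPath: /usr/share/nginx/html
  volumes:
  - name: web
    hostPath:
      path: /data/
      type: DirectoryOrCreate
EOF
}

# Write both manifests to a scratch directory; apply them with
# `kubectl apply -f "$outdir"` on a machine that has cluster access.
outdir=$(mktemp -d)
render_pod nginx01 node01 > "$outdir/nginx01.yaml"
render_pod nginx02 node02 > "$outdir/nginx02.yaml"
echo "manifests written to $outdir"
```

A `nodeSelector` on a node label would be the softer alternative, since it still goes through the scheduler.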

3. Create the test page for nginx01

    [root@master01 data]# kubectl exec -it nginx01 -- /bin/bash
    root@nginx01:/# cd /usr/share/nginx/html/
    root@nginx01:/usr/share/nginx/html# echo "this is nginx01" > index.html

4. Create the test page for nginx02

    [root@master01 data]# kubectl exec -it nginx02 -- /bin/bash
    root@nginx02:/# cd /usr/share/nginx/html/
    root@nginx02:/usr/share/nginx/html# echo "this is nginx02" > index.html
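Because /usr/share/nginx/html is the hostPath mount, a file written inside the Pod ends up in /data on the node that runs it. The page can therefore also be created on the node itself, without `kubectl exec`. A minimal sketch, assuming a hypothetical helper named `write_page`; the demo writes to a scratch directory rather than /data so it is safe to run anywhere.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the test page straight into the hostPath directory.
# $1 = directory backing the volume (/data on the node), $2 = Pod name.
write_page() {
    local dir=$1 pod=$2
    mkdir -p "$dir"
    echo "this is ${pod}" > "$dir/index.html"
}

# Demo against a scratch directory; on node01 the call would be
# `write_page /data nginx01`, on node02 `write_page /data nginx02`.
demo=$(mktemp -d)
write_page "$demo" nginx01
cat "$demo/index.html"
```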

5. Check the status

    [root@master01 shiyan]# kubectl get pod -owide
    NAME      READY   STATUS    RESTARTS   AGE   IP           NODE     NOMINATED NODE   READINESS GATES
    nginx01   1/1     Running   0          17m   10.244.1.4   node01   <none>           <none>
    nginx02   1/1     Running   0          16m   10.244.2.3   node02   <none>           <none>
    [root@master01 shiyan]# curl 10.244.2.3
    this is nginx02
    [root@master01 shiyan]# curl 10.244.1.4
    this is nginx01

IV. Publish the service

1. Publish with a NodePort Service

    [root@master01 data]# cat nginx.yaml
    apiVersion: v1
    kind: Service
    metadata:
      creationTimestamp: null
      name: nginx01
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 80
        nodePort: 30000
      selector:
        app: nginx
      type: NodePort
    status:
      loadBalancer: {}

2. Check the Service

    [root@master01 data]# kubectl get svc,pod -owide
    NAME                 TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE   SELECTOR
    service/kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP        21d   <none>
    service/nginx01      NodePort    10.96.82.239   <none>        80:30000/TCP   22s   app=nginx
    NAME          READY   STATUS    RESTARTS   AGE   IP           NODE     NOMINATED NODE   READINESS GATES
    pod/nginx01   1/1     Running   0          44m   10.244.1.4   node01   <none>           <none>
    pod/nginx02   1/1     Running   0          43m   10.244.2.3   node02   <none>           <none>

3. Bind the Pods to the Service through the label selector

The Pods were created without labels, so the Service selector app=nginx matches nothing until both Pods are labeled.

    [root@master01 data]# kubectl get pod --show-labels
    NAME      READY   STATUS    RESTARTS   AGE   LABELS
    nginx01   1/1     Running   0          48m   <none>
    nginx02   1/1     Running   0          47m   <none>
    [root@master01 data]# kubectl label pod nginx01 app=nginx
    pod/nginx01 labeled
    [root@master01 data]# kubectl label pod nginx02 app=nginx
    pod/nginx02 labeled
    [root@master01 data]# kubectl get pod --show-labels
    NAME      READY   STATUS    RESTARTS   AGE   LABELS
    nginx01   1/1     Running   0          52m   app=nginx
    nginx02   1/1     Running   0          51m   app=nginx

4. Check the result

    [root@master01 data]# curl 192.168.217.66:30000
    this is nginx02
    [root@master01 data]# curl 192.168.217.66:30000
    this is nginx01
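Two requests are enough to see both pages here, but a larger sample gives a clearer picture of how requests are spread across the Pods. The sketch below tallies response bodies; `tally_backends` is a hypothetical helper, and the demo input is the two responses shown above rather than live traffic.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count how many responses came from each backend.
# Reads one response body per line on stdin.
tally_backends() {
    sort | uniq -c | awk '{ count = $1; $1 = ""; sub(/^ /, ""); print $0 ": " count }'
}

# Sample responses copied from the curl output, so this runs offline.
# Against the cluster the input would come from something like:
#   for i in $(seq 1 20); do curl -s 192.168.217.66:30000; done | tally_backends
printf '%s\n' "this is nginx02" "this is nginx01" | tally_backends
```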

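V. Load balancing and high availability

(4) In the load-balancing zone, configure Keepalived + Nginx for highly available load balancing, so that the service published from K8s is reachable through the VIP 192.168.10.100 and a custom port. (20 points)

This lab runs on the 192.168.217.0/24 network, so the VIP used below is 192.168.217.100 rather than the 192.168.10.100 named in the task.

1. nginx configuration file

Forward the chosen port 30010 to the IP and NodePort published by the two nodes.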

    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log notice;
    pid /var/run/nginx.pid;
    events {
        worker_connections 1024;
    }
    stream {
        log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
        access_log /var/log/nginx/k8s-access.log main;
        upstream k8s-apiserver {
            server 192.168.217.66:30000;
            server 192.168.217.77:30000;
        }
        server {
            listen 30010;
            proxy_pass k8s-apiserver;
        }
    }
    http {
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';
        access_log /var/log/nginx/access.log main;
        sendfile on;
        #tcp_nopush on;
        keepalive_timeout 65;
        #gzip on;
        include /etc/nginx/conf.d/*.conf;
    }
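The stream block is the only part of this file specific to the lab: it listens on 30010 and forwards plain TCP to port 30000 on each node. The upstream here fronts the NodePort Service, not the API server, so its name is only a label. As a sketch, the block could be generated from the node list; `render_stream` and the upstream name `k8s-nodeport` are assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical generator: print an nginx stream block that forwards
# LISTEN_PORT to NODE_PORT on every node address given as an argument.
# $1 = listen port, $2 = NodePort, remaining arguments = node IPs.
render_stream() {
    local listen_port=$1 node_port=$2
    shift 2
    echo "stream {"
    echo "    upstream k8s-nodeport {   # assumed name; any identifier works"
    local ip
    for ip in "$@"; do
        echo "        server ${ip}:${node_port};"
    done
    echo "    }"
    echo "    server {"
    echo "        listen ${listen_port};"
    echo "        proxy_pass k8s-nodeport;"
    echo "    }"
    echo "}"
}

render_stream 30010 30000 192.168.217.66 192.168.217.77
```

After editing the real nginx.conf, `nginx -t` checks the syntax before nginx is restarted.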

2. keepalived configuration files

Master

    ! Configuration File for keepalived
    global_defs {
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id LVS_01
        vrrp_skip_check_adv_addr
        vrrp_garp_interval 0
        vrrp_gna_interval 0
    }
    vrrp_script check_down {
        script "/etc/keepalived/ng.sh"
        interval 1
        weight -30
        fall 3
        rise 2
        timeout 2
    }
    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.217.100
        }
        track_script {
            check_down
        }
    }

Backup

    ! Configuration File for keepalived
    global_defs {
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id LVS_02
        vrrp_skip_check_adv_addr
        vrrp_garp_interval 0
        vrrp_gna_interval 0
    }
    vrrp_script check_down {
        script "/etc/keepalived/ng.sh"
        interval 1
        weight -30
        fall 3
        rise 2
        timeout 2
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        virtual_router_id 51
        priority 80
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.217.100
        }
        track_script {
            check_down
        }
    }

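3. Nginx health-check script

Script (/etc/keepalived/ng.sh)

    #!/bin/bash
    # Check whether Nginx is running by counting its worker processes
    NGINX_STATUS=$(ps aux | grep '[n]ginx: worker process' | wc -l)
    # Threshold: at least one Nginx worker process must be running
    THRESHOLD=1
    if [ "$NGINX_STATUS" -ge "$THRESHOLD" ]; then
        echo "OK - Nginx is running"
        exit 0 # service is healthy
    else
        echo "CRITICAL - Nginx is not running"
        exit 1 # service has a problem
    fi

Make the script executable:

    [root@ng02 keepalived]# chmod +x ng.sh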

4. Virtual IP failover

Result: after nginx is stopped on ng01, the VIP stays for a few more checks and then leaves the host.

    [root@ng01 keepalived]# systemctl stop nginx
    [root@ng01 keepalived]# hostname -I
    192.168.217.22 192.168.217.100
    [root@ng01 keepalived]# hostname -I
    192.168.217.22 192.168.217.100
    [root@ng01 keepalived]# hostname -I
    192.168.217.22 192.168.217.100
    [root@ng01 keepalived]# hostname -I
    192.168.217.22
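The VIP moves because of the priority arithmetic in the two configurations: once ng.sh has failed `fall 3` times at `interval 1` (roughly three seconds), `weight -30` lowers ng01 from 100 to 70, which is below ng02's 80. The sketch below only models this negative-weight case; `effective_priority` is a hypothetical helper, not part of keepalived.

```shell
#!/usr/bin/env bash
# Hypothetical helper: effective VRRP priority after a tracked script runs.
# $1 = configured priority, $2 = vrrp_script weight, $3 = "ok" or "failed".
# Only the negative-weight case used in this lab is modelled: the priority
# drops by |weight| while the script is failing and is unchanged otherwise.
effective_priority() {
    local base=$1 weight=$2 state=$3
    if [ "$state" = "failed" ] && [ "$weight" -lt 0 ]; then
        echo $(( base + weight ))
    else
        echo "$base"
    fi
}

master=$(effective_priority 100 -30 failed)  # ng01 after nginx stops
backup=$(effective_priority 80 -30 ok)       # ng02 with nginx still running
echo "ng01=$master ng02=$backup"
if [ "$backup" -gt "$master" ]; then
    echo "ng02 takes over the VIP"
fi
```

The weight has to be larger than the gap between the two priorities (here 30 > 20); with a smaller weight ng01 would stay master even with nginx down.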

5. Result

Requests to the VIP on the chosen port return the pages from both Pods.

    [root@localhost ~]# curl 192.168.217.100:30010
    this is nginx02
    [root@localhost ~]# curl 192.168.217.100:30010
    this is nginx01
    [root@localhost ~]# curl 192.168.217.100:30010
    this is nginx02
    [root@localhost ~]# curl 192.168.217.100:30010
    this is nginx01

VI. iptables gateway server

(5) On the iptables firewall server, set up two network interfaces and configure SNAT and DNAT so that an external client can reach the internal web service through 12.0.0.1. (10 points)

1. Set up two network interfaces

ens33:

    [root@iptables net]# cd /etc/sysconfig/network-scripts/
    [root@iptables network-scripts]# cat ifcfg-ens33
    TYPE=Ethernet
    PROXY_METHOD=none
    BROWSER_ONLY=no
    BOOTPROTO=static
    DEFROUTE=yes
    IPV4_FAILURE_FATAL=no
    NAME=ens33
    UUID=c770d08d-12a0-4e69-9a6c-a5457b33d89c
    DEVICE=ens33
    ONBOOT=yes
    IPADDR=192.168.217.55
    NETMASK=255.255.255.0
    GATEWAY=192.168.217.2
    DNS1=8.8.8.8
    DNS2=114.114.114.114

ens36:

    [root@iptables network-scripts]# cat ifcfg-ens36
    TYPE=Ethernet
    PROXY_METHOD=none
    BROWSER_ONLY=no
    BOOTPROTO=static
    DEFROUTE=yes
    IPV4_FAILURE_FATAL=no
    NAME=ens36
    DEVICE=ens36
    ONBOOT=yes
    IPADDR=12.0.0.1
    NETMASK=255.255.255.0
    GATEWAY=12.0.0.1
    DNS1=8.8.8.8
    DNS2=114.114.114.114

Restart the network service:

    [root@iptables network-scripts]# systemctl restart network

2. Enable IP forwarding

    [root@iptables network-scripts]# cat /etc/sysctl.conf
    # sysctl settings are defined through files in
    # /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.
    #
    # Vendors settings live in /usr/lib/sysctl.d/.
    # To override a whole file, create a new file with the same in
    # /etc/sysctl.d/ and put new settings there. To override
    # only specific settings, add a file with a lexically later
    # name in /etc/sysctl.d/ and put new settings there.
    #
    # For more information, see sysctl.conf(5) and sysctl.d(5).
    net.ipv4.ip_forward = 1
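Editing /etc/sysctl.conf does not change the running kernel by itself; the setting takes effect after `sysctl -p` (or a reboot). A small sketch for checking the live value follows; `forwarding_enabled` is a hypothetical helper, and its optional argument exists only so the check can be pointed at a different file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether IPv4 forwarding is enabled.
# $1 = file holding the flag; defaults to the kernel's live value.
forwarding_enabled() {
    local flag_file=${1:-/proc/sys/net/ipv4/ip_forward}
    [ "$(cat "$flag_file" 2>/dev/null)" = "1" ]
}

if forwarding_enabled; then
    echo "ip_forward is on"
else
    echo "ip_forward is off; run 'sysctl -p' after editing /etc/sysctl.conf"
fi
```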

3. Add the iptables rule

    [root@iptables network-scripts]# iptables -t nat -A PREROUTING -i ens36 -d 12.0.0.1 -p tcp --dport 80 -j DNAT --to 192.168.217.100:30010

View the rules:

    [root@iptables network-scripts]# iptables -t nat -vnL
    Chain PREROUTING (policy ACCEPT 163 packets, 14674 bytes)
     pkts bytes target     prot opt in     out     source               destination
       14   840 DNAT       tcp  --  ens36  *       0.0.0.0/0            12.0.0.1             tcp dpt:80 to:192.168.217.100:30010
    Chain INPUT (policy ACCEPT 28 packets, 4183 bytes)
     pkts bytes target     prot opt in     out     source               destination
    Chain OUTPUT (policy ACCEPT 143 packets, 10701 bytes)
     pkts bytes target     prot opt in     out     source               destination
    Chain POSTROUTING (policy ACCEPT 234 packets, 17504 bytes)
     pkts bytes target     prot opt in     out     source               destination
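The task asks for both SNAT and DNAT, but the listing shows only the DNAT rule and an empty POSTROUTING chain. If hosts on the inside network also need to open connections toward the 12.0.0.0/24 side, an SNAT rule would be the usual complement. The sketch below is an assumption and was not part of the original lab: `nat_rules` is a hypothetical helper that prints the commands instead of running them, with the interface and addresses taken from the environment above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not apply) the NAT rules for this gateway.
# Printing keeps the sketch safe to run anywhere; the printed commands
# would have to be run as root on the gateway to take effect.
# $1 = outside interface, $2 = outside IP, $3 = inside network, $4 = backend ip:port.
nat_rules() {
    local wan_if=$1 wan_ip=$2 lan_net=$3 backend=$4
    # DNAT: the rule used in this lab, inbound port 80 on the outside address.
    echo "iptables -t nat -A PREROUTING -i ${wan_if} -d ${wan_ip} -p tcp --dport 80 -j DNAT --to-destination ${backend}"
    # SNAT (assumption, not in the original lab): rewrite the source of
    # traffic leaving the inside network to the outside address.
    echo "iptables -t nat -A POSTROUTING -s ${lan_net} -o ${wan_if} -j SNAT --to-source ${wan_ip}"
}

nat_rules ens36 12.0.0.1 192.168.217.0/24 192.168.217.100:30010
```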

4. Point the default gateway of both nginx load balancers at ens33 of the iptables gateway server (192.168.217.55), so that replies to external clients go back through the gateway

ng02:

    [root@ng02 keepalived]# route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         192.168.217.55  0.0.0.0         UG    100    0        0 ens33
    192.168.217.0   0.0.0.0         255.255.255.0   U     100    0        0 ens33

ng01:

    [root@ng01 keepalived]# route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         192.168.217.55  0.0.0.0         UG    100    0        0 ens33
    192.168.217.0   0.0.0.0         255.255.255.0   U     100    0        0 ens33

Restart the network.

5, 准备客户端

改客户端网络 网卡指向12.0.0.1

[root@client network-scripts]#cat ifcfg-ens33 TYPE=Ethernet PROXY_METHOD=none BROWSER_ONLY=no BOOTPROTO=static DEFROUTE=yes IPV4_FAILURE_FATAL=no NAME=ens33 DEVICE=ens33 ONBOOT=yes IPADDR=12.0.0.12 NETMASK=255.255.255.0 GATEWAY=12.0.0.1 DNS1=8.8.8.8 DNS2=114.114.114.114

重启网络

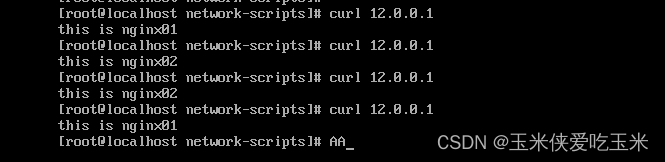

6, 实验效果

客户端访问12.0.0.1 可以看到后面k8s 中的nginx pod 的页面
